Case Study

Field Choreography Tool and Animations Developed at WET (2014)

Below is a case study of a choreography tool and a set of animations that I conceptualized, designed, and developed largely on my own, in between concurrent projects.

Version 1 (1-week timeline)

Day 1: Background and Research

On the first day of the project, I was briefed by designer Jules Moretti, who had just returned from a trip to China. He described his interactions with the client and the project objectives.

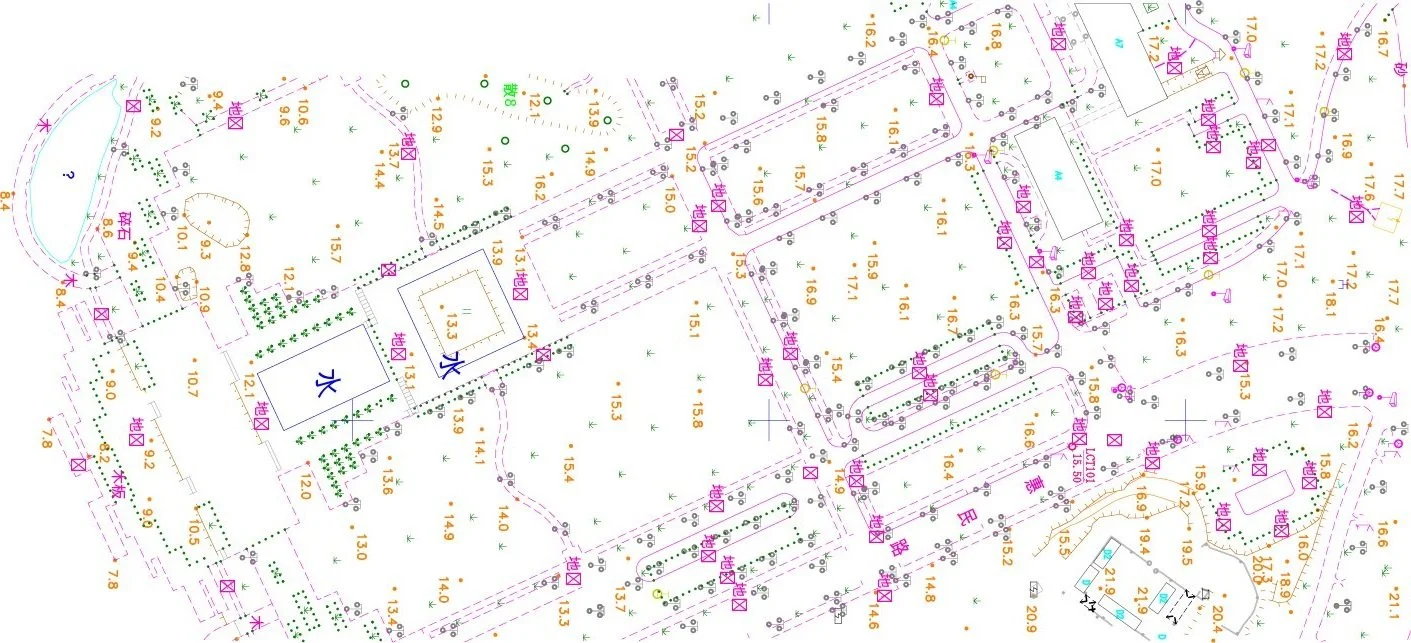

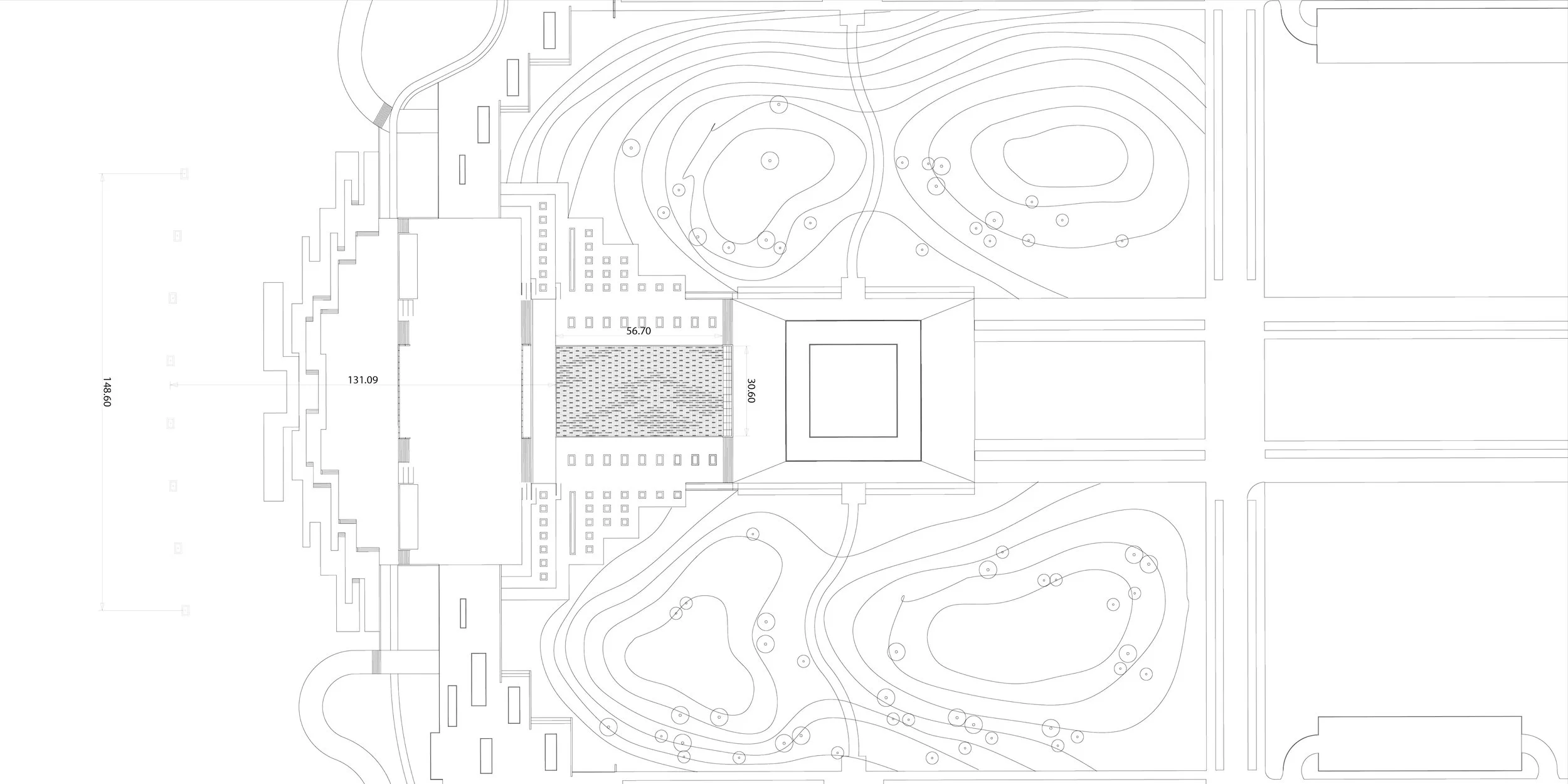

The client came to WET to conceptualize and build a water feature for a courtyard in a municipal complex by a lake. The courtyard/plaza is shown above, photographed by Jules during a site visit; it also appears, illuminated, in the scale model on the right. Below is a diagram of the layout, provided by the client.

When I heard that the design team at WET wanted to propose a grid of 10,000 nozzles, I immediately suggested a “warp and weft” tile pattern from one of my favorite books, Ten Thousand Things by art historian Lothar Ledderose. I am fascinated by Chinese art and art history, and studying the field has inspired a lifelong interest in the relationship between module, mass production, and artistic expression. Jules generated the tile pattern shown above right and below from the reference image I provided.

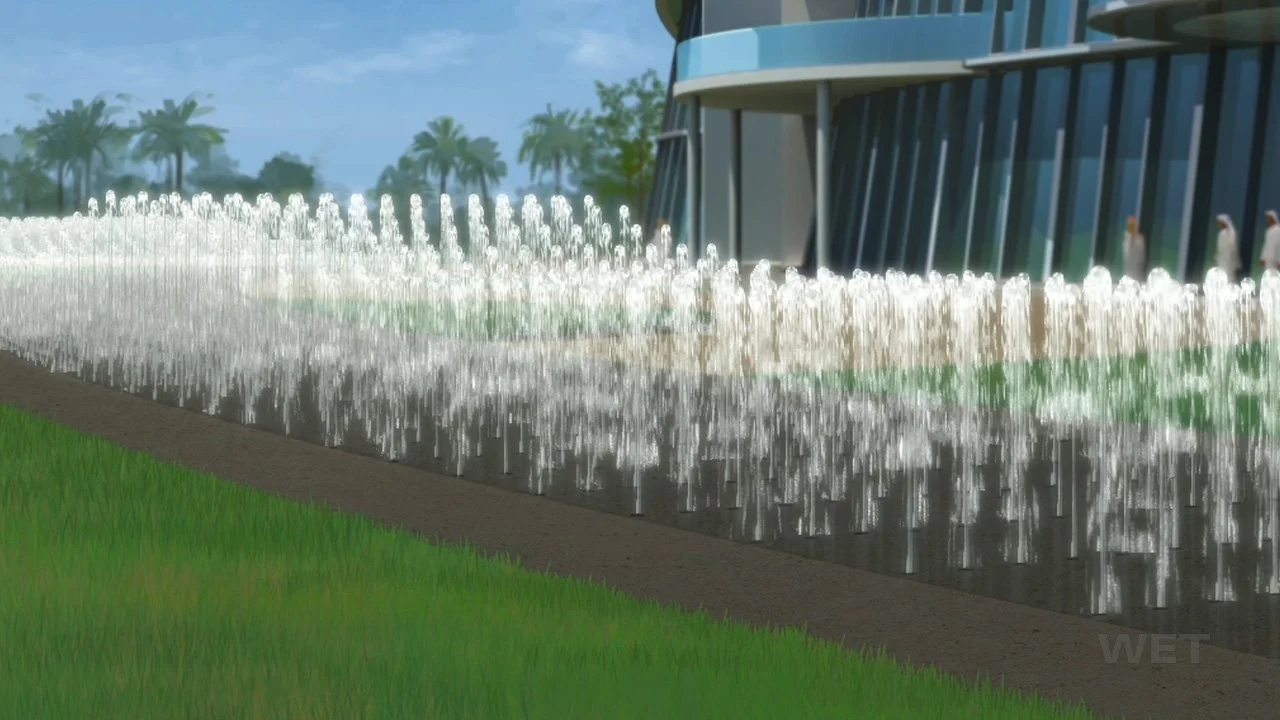

WET calls the proposed water device an “analog sprite nozzle.” It can either fire a single burst of water or be choreographed to produce a continuous stream at a variable height: the stream can hold a given height indefinitely, drop off suddenly, change height, and so on. As the pictures above show, the shape of the stream itself is carefully crafted and beautiful.

Days 2-3: Determine Visualization Strategy (Texture to Choreography)

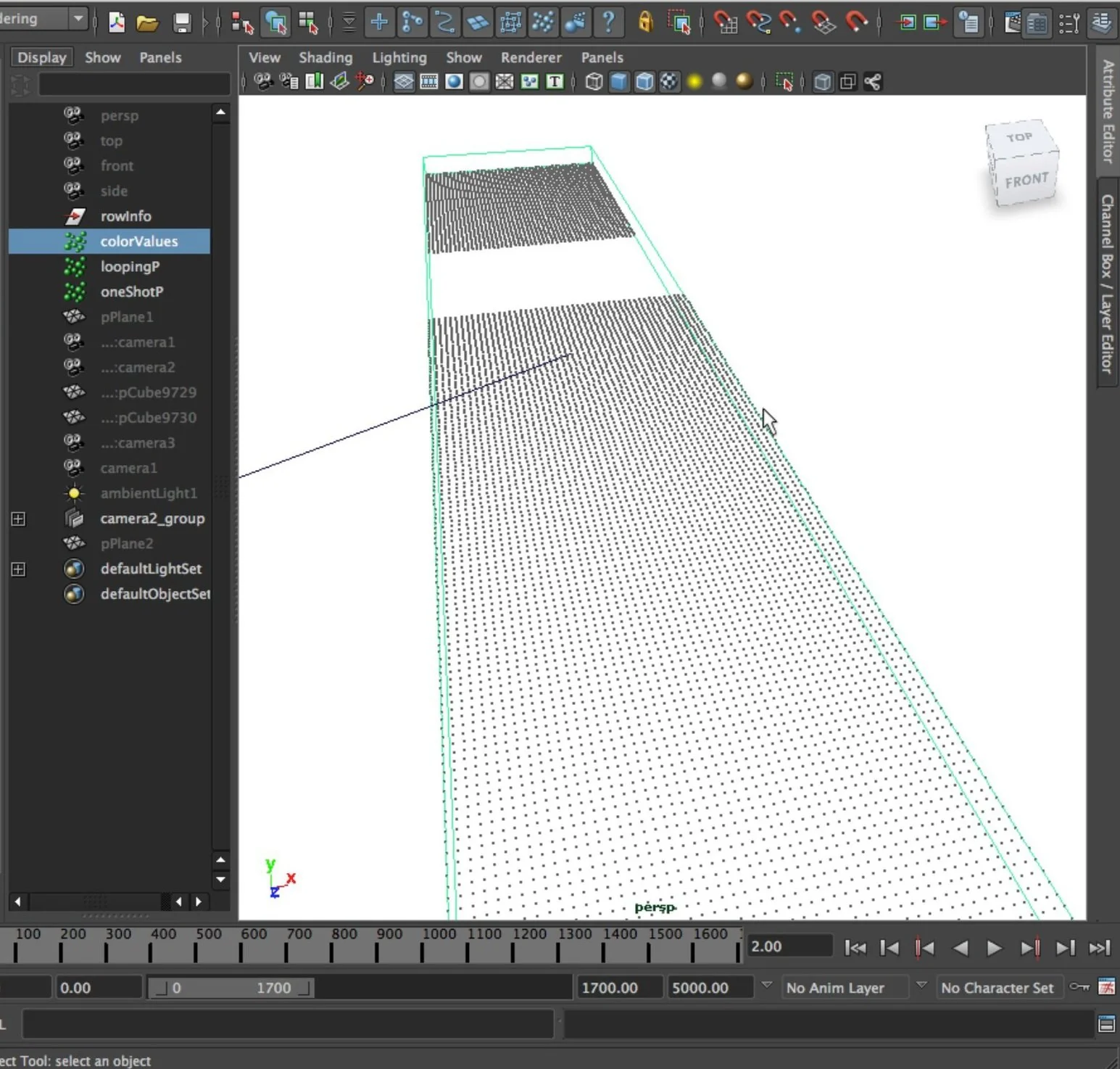

My task was to produce a concept animation to communicate the water feature to the client within a few days. At the time, WET primarily used Maya, so I wanted to find a way to visualize the feature in that software. I decided to drive the choreography by sampling pixel data from a texture. Because as many as 10,000 nozzles might fire at once, I judged it safer to render them as sprite sequences rather than 3D geometry. Below you can see a MEL script I wrote to build a node network centered on an “arrayMapper” node. This node, which I discovered along the way, samples an incoming texture every frame and maps its pixel data to an array.
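Maya's arrayMapper node does this sampling internally, so the MEL script mostly wires nodes together. The minimal Python sketch below roughly illustrates what happens each frame: it looks up one value per particle from a grayscale texture. It assumes the texture is a list of rows with values in [0, 1], that row 0 corresponds to v = 0, and that sampling is bilinear. These are illustrative choices, not a description of arrayMapper's actual filtering.

```python
from typing import List, Sequence

# Grayscale texture as rows of floats in [0, 1]; row 0 is v = 0 (assumption).
Texture = List[List[float]]


def sample_bilinear(tex: Texture, u: float, v: float) -> float:
    """Bilinearly sample tex at (u, v) in [0, 1], treating pixels as cell centers."""
    h, w = len(tex), len(tex[0])
    # Convert UV to continuous pixel coordinates, clamped to the texture edges.
    x = min(max(u * w - 0.5, 0.0), w - 1.0)
    y = min(max(v * h - 0.5, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    # Blend horizontally on the two rows, then vertically between them.
    top = tex[y0][x0] * (1 - fx) + tex[y0][x1] * fx
    bottom = tex[y1][x0] * (1 - fx) + tex[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def map_texture_to_array(tex: Texture, us: Sequence[float],
                         vs: Sequence[float]) -> List[float]:
    """Return one sampled value per particle, like a per-frame array lookup."""
    return [sample_bilinear(tex, u, v) for u, v in zip(us, vs)]
```

Calling `map_texture_to_array` once per frame of the input image sequence gives each nozzle a time-varying value. The expressions can then turn that value into emission, height, and sprite frame.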

Animation Expressions

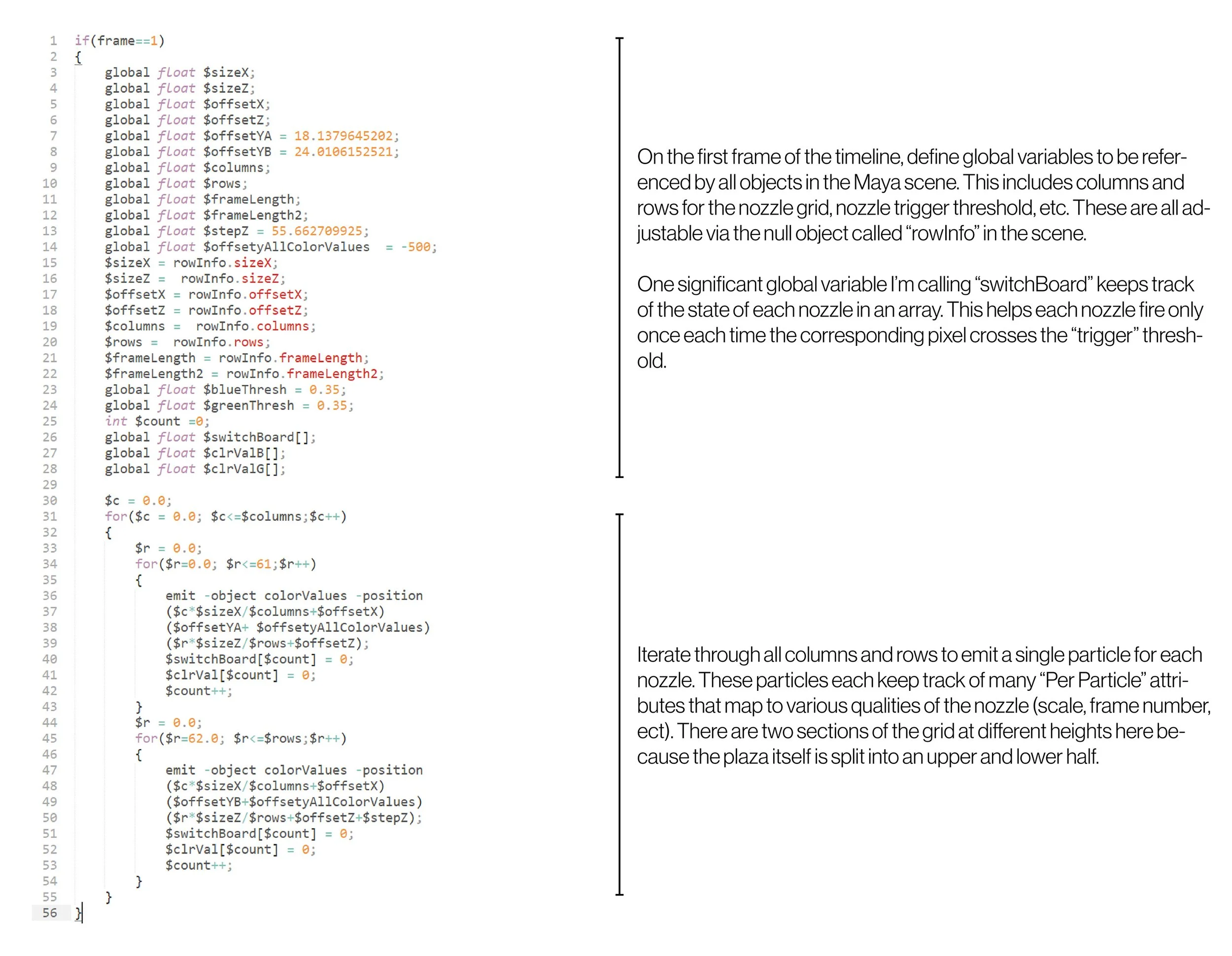

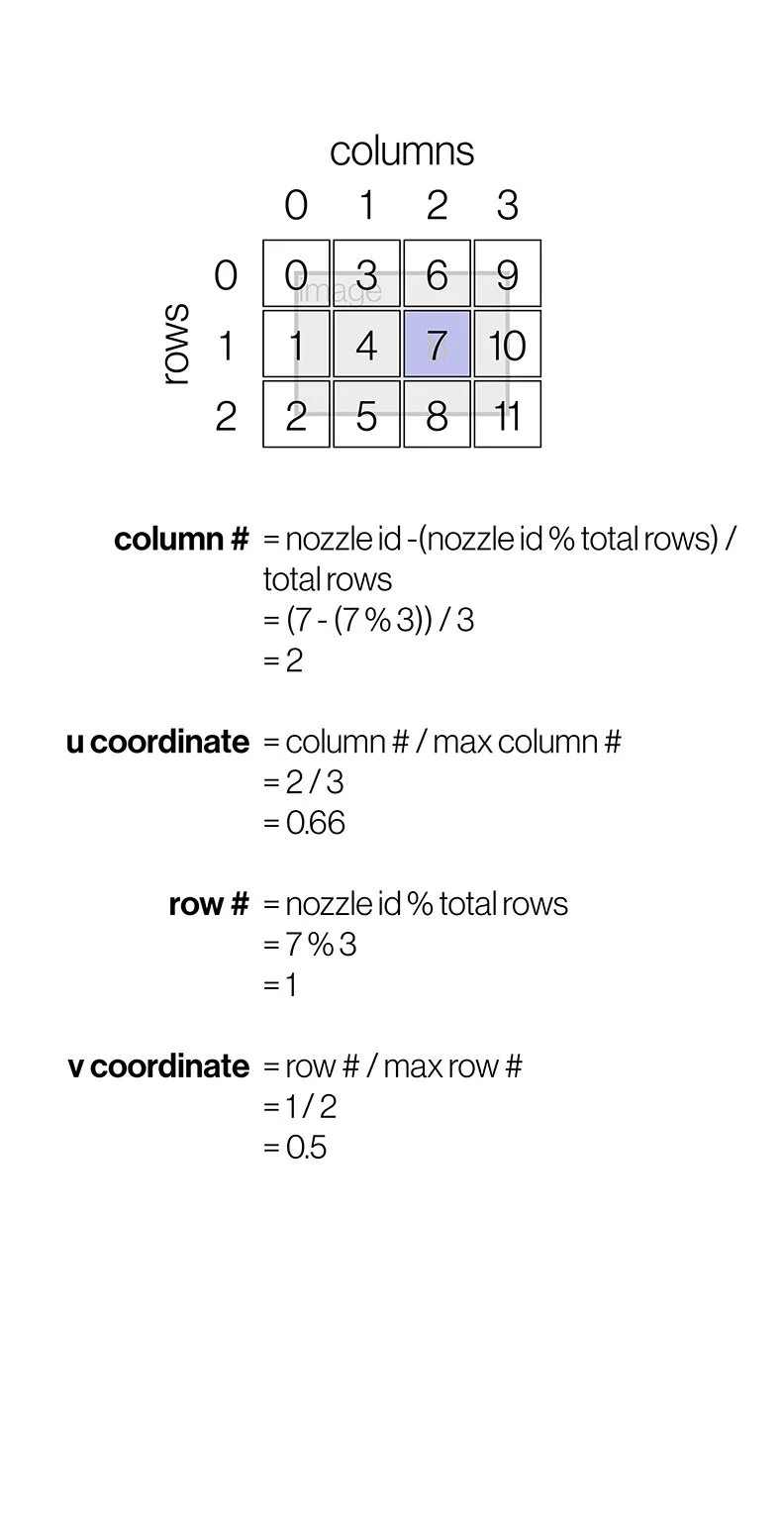

Expressions in Maya are snippets of code that execute every frame of an animation. I wrote the following expressions to use the incoming texture data to manage nozzle properties like emission, height and frame number. The first expression creates the virtual grid of nozzles and the associated physical properties.
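Below is a minimal Python sketch of the grid-creation step. It assumes a 100 x 100 layout (to match the proposed 10,000 nozzles), a hypothetical uniform spacing, and row-major particle IDs. The `Nozzle` fields are illustrative stand-ins for the per-particle attributes the Maya expression would set.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Nozzle:
    pid: int                # particle ID, assigned row by row (assumption)
    x: float                # position on the plaza floor
    z: float
    emitting: bool = False  # hypothetical per-nozzle attributes
    height: float = 0.0


def make_nozzle_grid(cols: int, rows: int, spacing: float) -> List[Nozzle]:
    """Lay out cols x rows nozzles centered on the origin, with row-major IDs."""
    x_off = (cols - 1) * spacing / 2.0
    z_off = (rows - 1) * spacing / 2.0
    grid = []
    for pid in range(cols * rows):
        row, col = divmod(pid, cols)
        grid.append(Nozzle(pid, col * spacing - x_off, row * spacing - z_off))
    return grid
```

Row-major IDs are a convenient choice here: each nozzle's texture coordinate can then be computed from its ID alone, with no extra lookup table.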

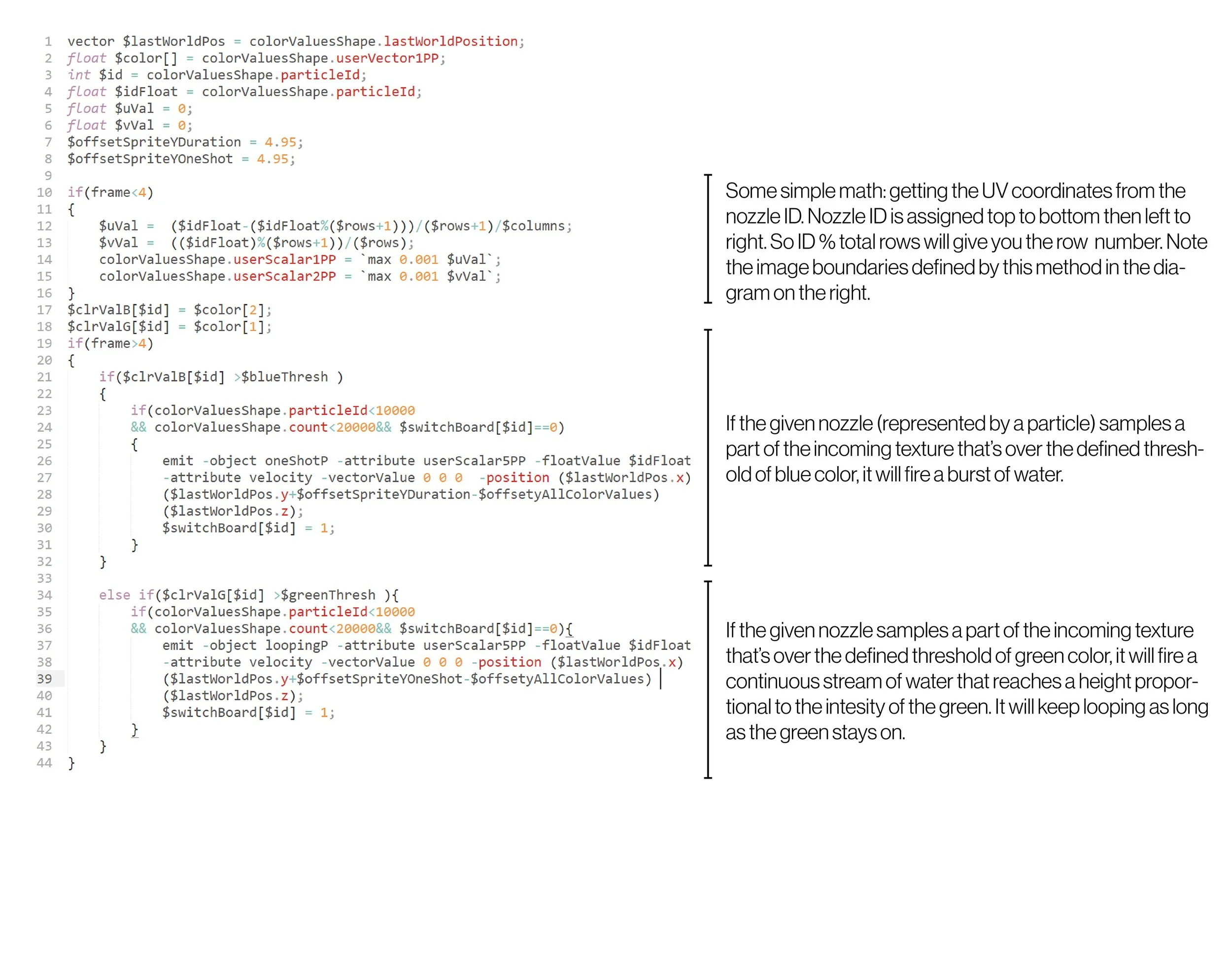

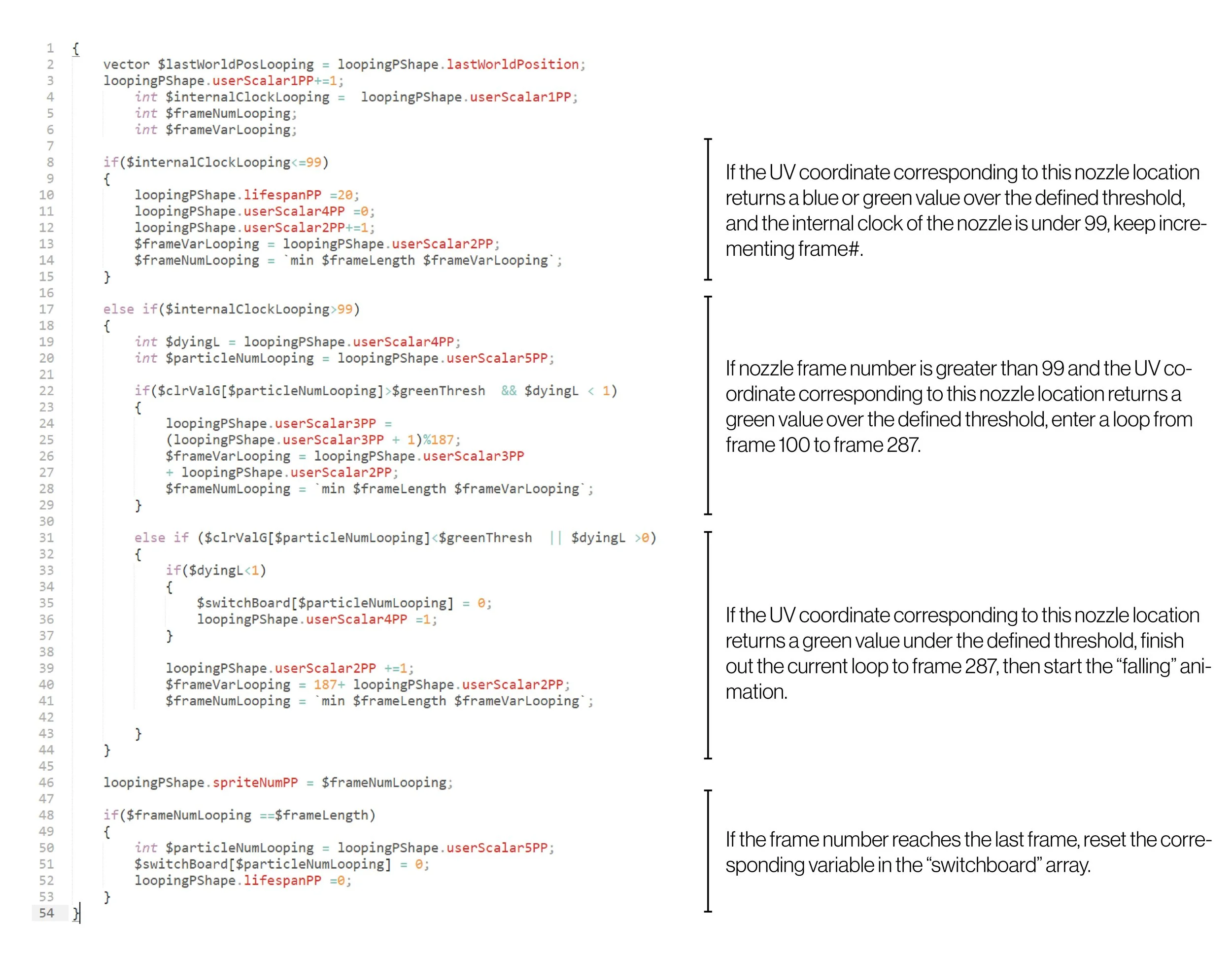

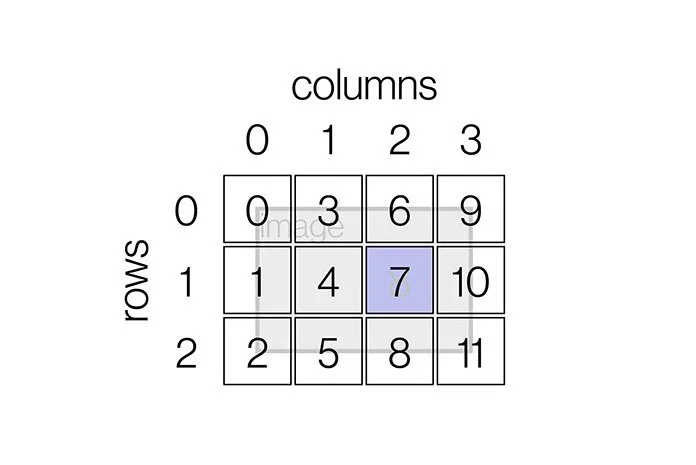

The following expression calculates each nozzle’s UV coordinate from its particle ID and samples the texture at that coordinate.
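The ID-to-UV mapping can be sketched in Python as follows. The sketch assumes row-major IDs on a `cols x rows` grid and samples at the center of each nozzle's cell, so each nozzle reads exactly one pixel when the texture resolution matches the grid. The nearest-pixel lookup and the row-0-at-v=0 convention are assumptions.

```python
from typing import List, Tuple


def uv_from_id(pid: int, cols: int, rows: int) -> Tuple[float, float]:
    """Map a row-major particle ID to the UV at the center of its grid cell."""
    row, col = divmod(pid, cols)
    return (col + 0.5) / cols, (row + 0.5) / rows


def sample_nearest(tex: List[List[float]], u: float, v: float) -> float:
    """Nearest-pixel lookup for u, v in [0, 1]; row 0 is v = 0 (assumption)."""
    h, w = len(tex), len(tex[0])
    x = min(int(u * w), w - 1)  # clamp so u = 1.0 stays on the last column
    y = min(int(v * h), h - 1)
    return tex[y][x]
```

For example, on a 4 x 4 grid, `uv_from_id(5, 4, 4)` gives `(0.375, 0.375)`, which is the center of the cell in row 1, column 1.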

The following expression determines which frame of the sprite animation each nozzle should display, based on the incoming texture data.

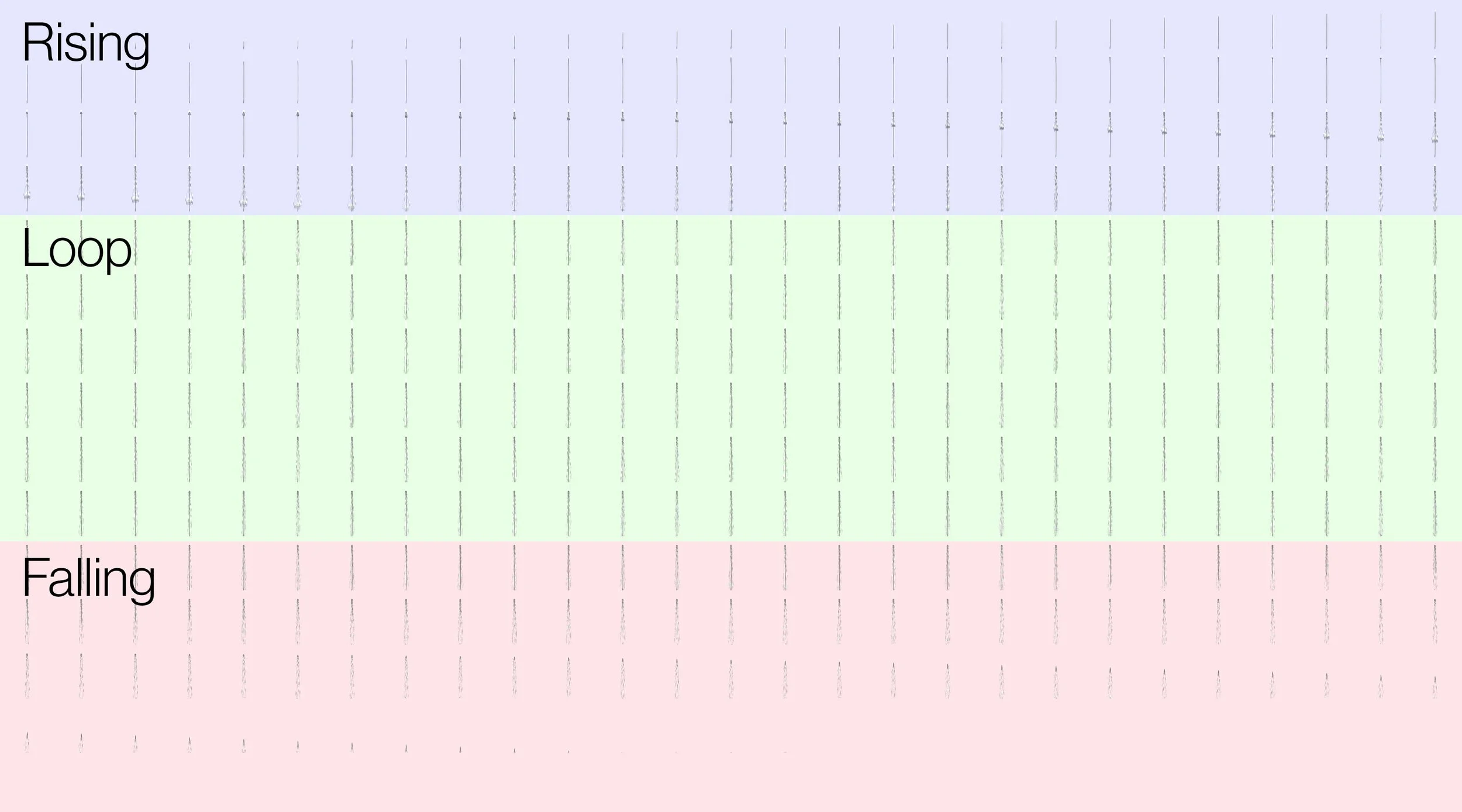

I asked my coworker ShiaChao Chiou to generate this animation of a single “analog” nozzle in RealFlow. It has three distinct parts: rising, looping, and falling. The nozzle animation exists both as a set of baked geometry and as a sequence of 2D renders. The 100-frame animation on the right is the “burst.”
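Frame selection can be thought of as a small per-nozzle state machine over the three parts of the RealFlow sequence. The Python sketch below makes several assumptions that are not from the original Maya expression, which is not reproduced here:

- The rising, looping, and falling sprites are stored back to back in one sequence.
- The three parts have hypothetical lengths of 20, 40, and 20 frames.
- A hypothetical on/off threshold of 0.5 is applied to the texture value.

The 100-frame burst is a separate one-shot sequence and is not modeled.

```python
from dataclasses import dataclass

# Hypothetical segment lengths for the rise / loop / fall sprite sequence.
RISE, LOOP, FALL = 20, 40, 20
ON_THRESHOLD = 0.5  # hypothetical: texture value above which the nozzle runs


@dataclass
class NozzleState:
    phase: str = "off"  # "off", "rise", "loop" or "fall"
    t: int = 0          # frames elapsed within the current phase


def step(state: NozzleState, value: float) -> int:
    """Advance one frame given this nozzle's texture value.

    Returns the sprite frame to display, or -1 when the nozzle is hidden.
    """
    on = value > ON_THRESHOLD
    if state.phase == "off":
        if not on:
            return -1
        state.phase, state.t = "rise", 0
    elif state.phase == "rise":
        state.t += 1
        if state.t == RISE:  # rise finished: start looping
            state.phase, state.t = "loop", 0
    elif state.phase == "loop":
        state.t = (state.t + 1) % LOOP
        if not on:  # texture switched off: start falling
            state.phase, state.t = "fall", 0
    elif state.phase == "fall":
        state.t += 1
        if state.t == FALL:  # fall finished: hide the nozzle
            state.phase, state.t = "off", 0
            return -1
    # Offset of each segment within the single sprite sequence.
    offsets = {"rise": 0, "loop": RISE, "fall": RISE + LOOP}
    return offsets[state.phase] + state.t
```

In this sketch, a rise always plays to completion before the loop begins, and a fall always completes before the nozzle can restart. A flickering input therefore cannot cut those segments short.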

Days 4-5: Animation Process

After setting up this system to take a sequence of still images and produce water choreography, I generated a wide range of input animations to explore the choreographic possibilities of a grid. At this point the exploration was primarily aesthetic: What is legible from different perspectives? What is engaging and exciting for people walking through the field of nozzles? In the video below you can see both blue and green input textures that produce different choreographies. It’s worth mentioning that this is a real-time capture of the Maya scene: the system needed to be lightweight so I could time the choreography precisely to music.
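Timing choreography to music largely comes down to knowing which frame each beat lands on. Below is a minimal Python sketch that assumes a constant tempo. The case study does not state the frame rate or tempo, so the 120 BPM and 24 fps in the example are placeholders.

```python
from typing import List


def beat_frames(bpm: float, fps: float, beats: int,
                offset_s: float = 0.0) -> List[int]:
    """Frame number on which each beat lands, rounded to the nearest frame.

    offset_s is the time of the first beat in seconds (e.g. a musical pickup).
    """
    seconds_per_beat = 60.0 / bpm
    # Each beat is computed from time zero, so rounding errors never accumulate.
    return [round((offset_s + i * seconds_per_beat) * fps) for i in range(beats)]
```

For example, `beat_frames(120, 24, 4)` returns `[0, 12, 24, 36]`. Computing each beat from time zero avoids the drift that would come from repeatedly adding a rounded frame step over a long track.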

Days 6-7: Rendering and Montage

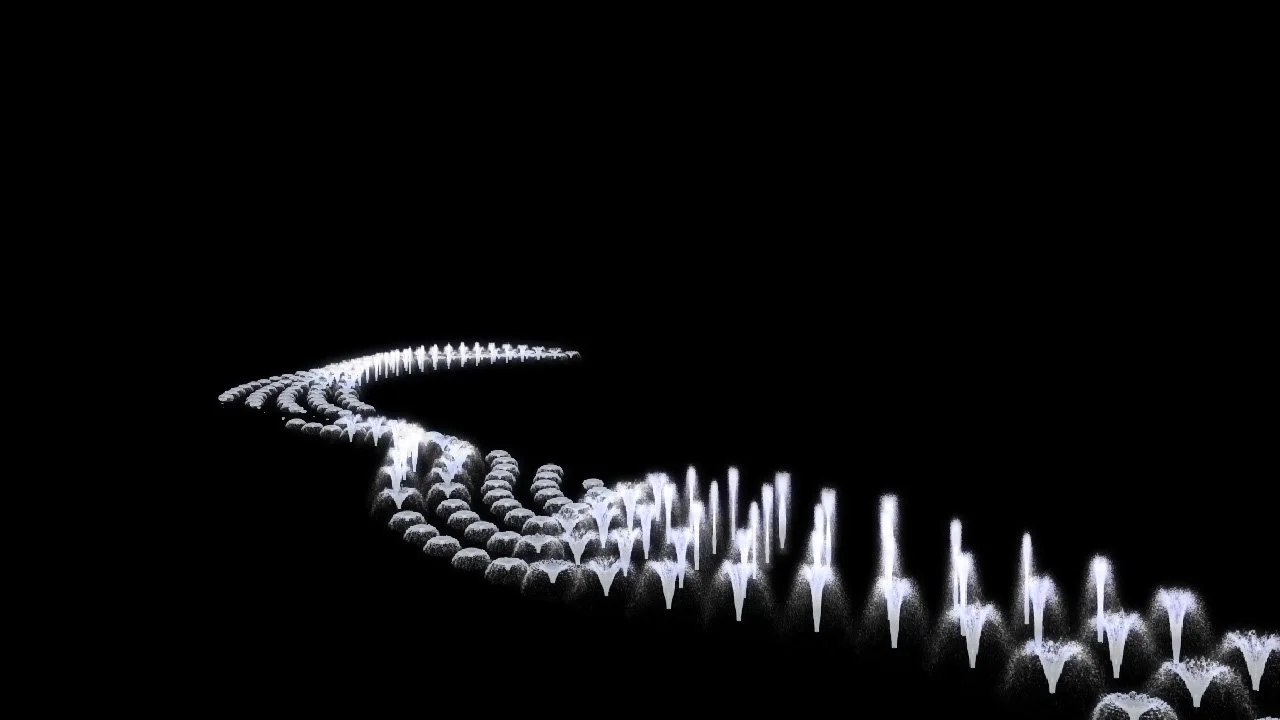

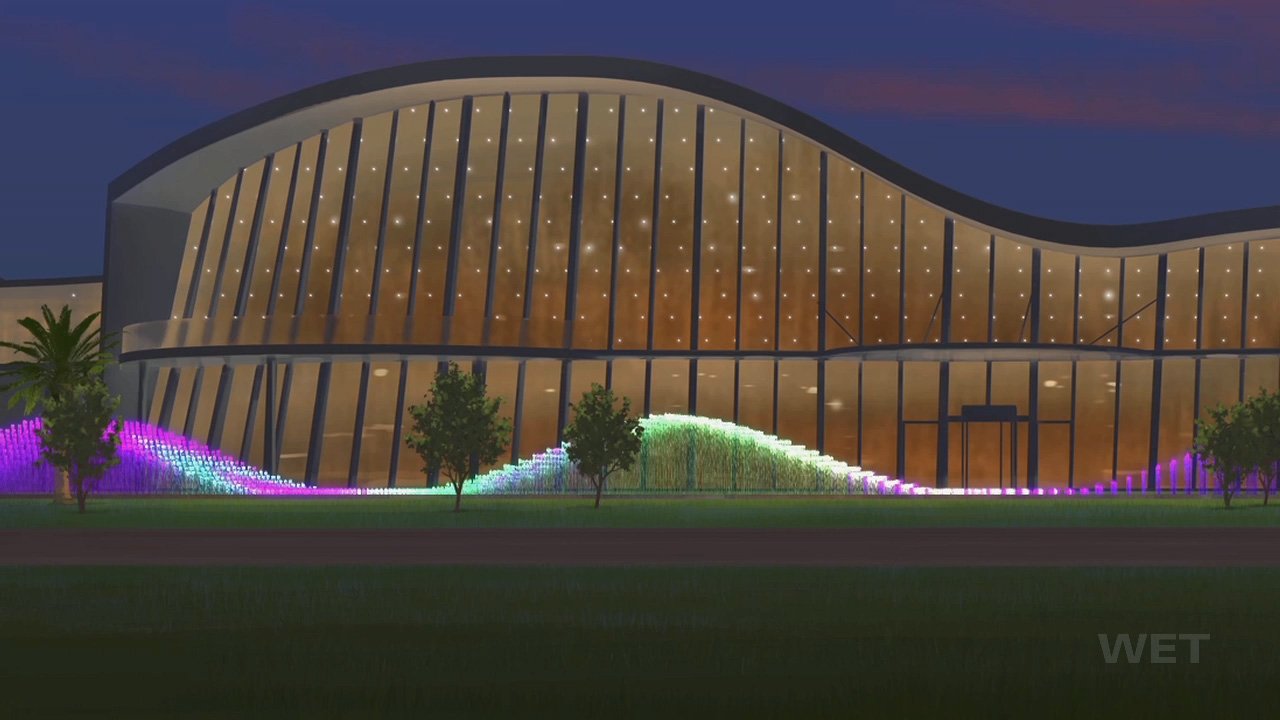

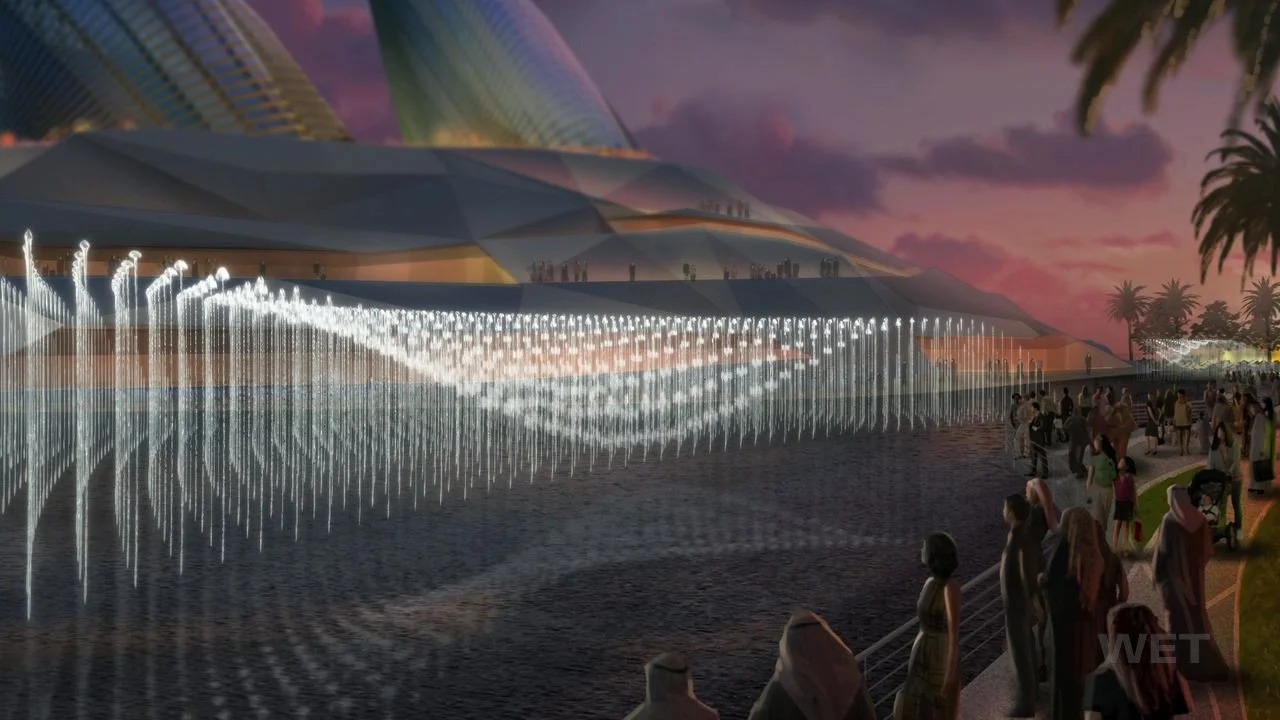

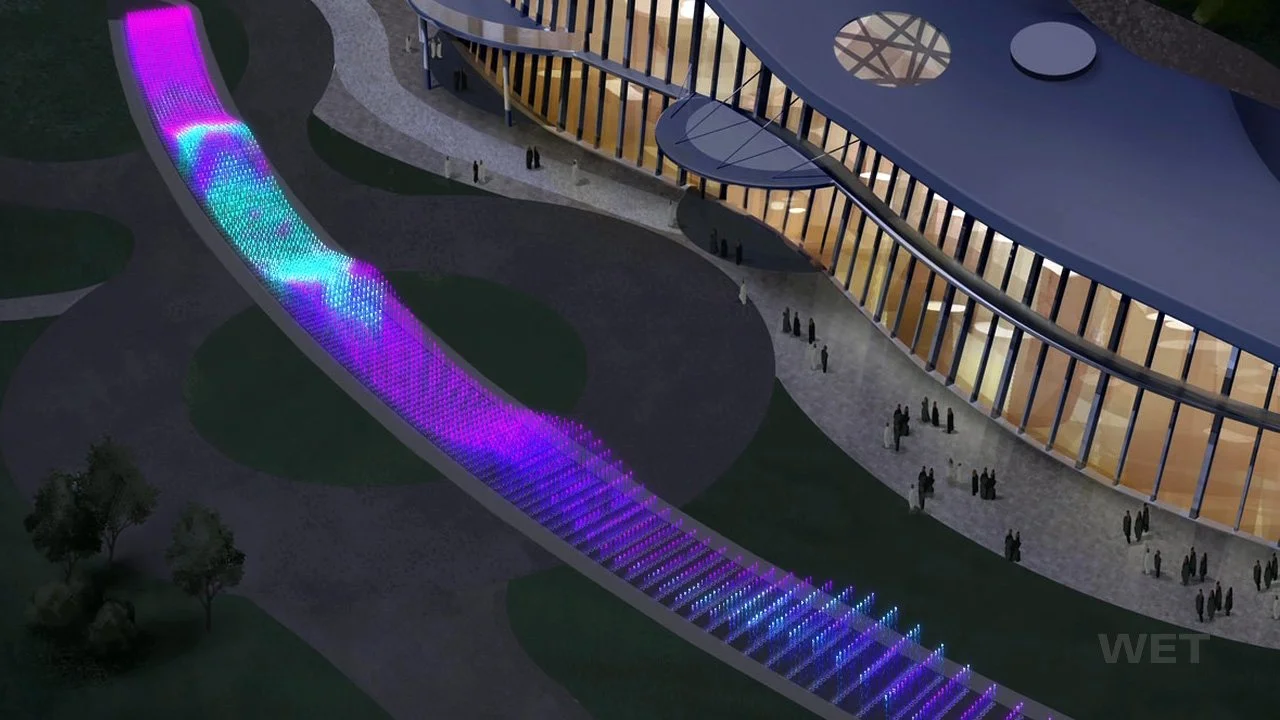

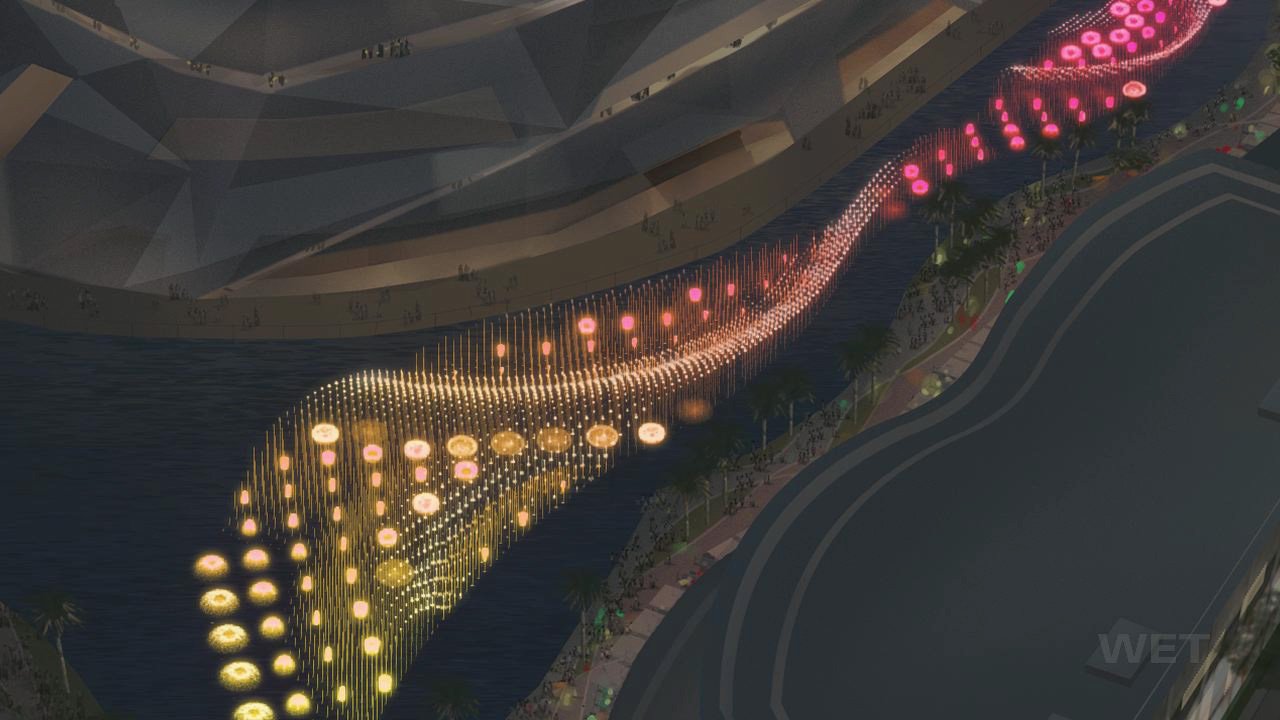

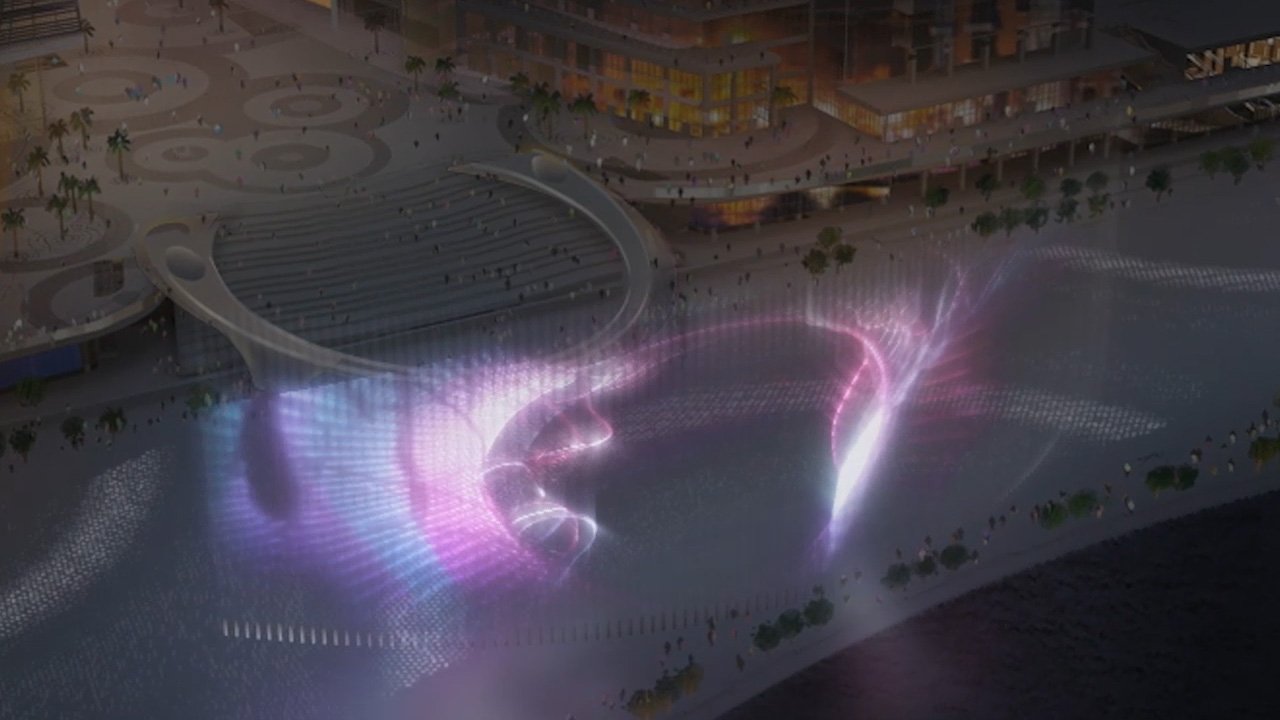

The final step was to produce renders for an animated montage. Below are clips from the montage that was presented to the client. I created all of the animations in it using the methods described above, mostly in Maya and After Effects. The backgrounds were painted by illustrator Yong Park at WET.

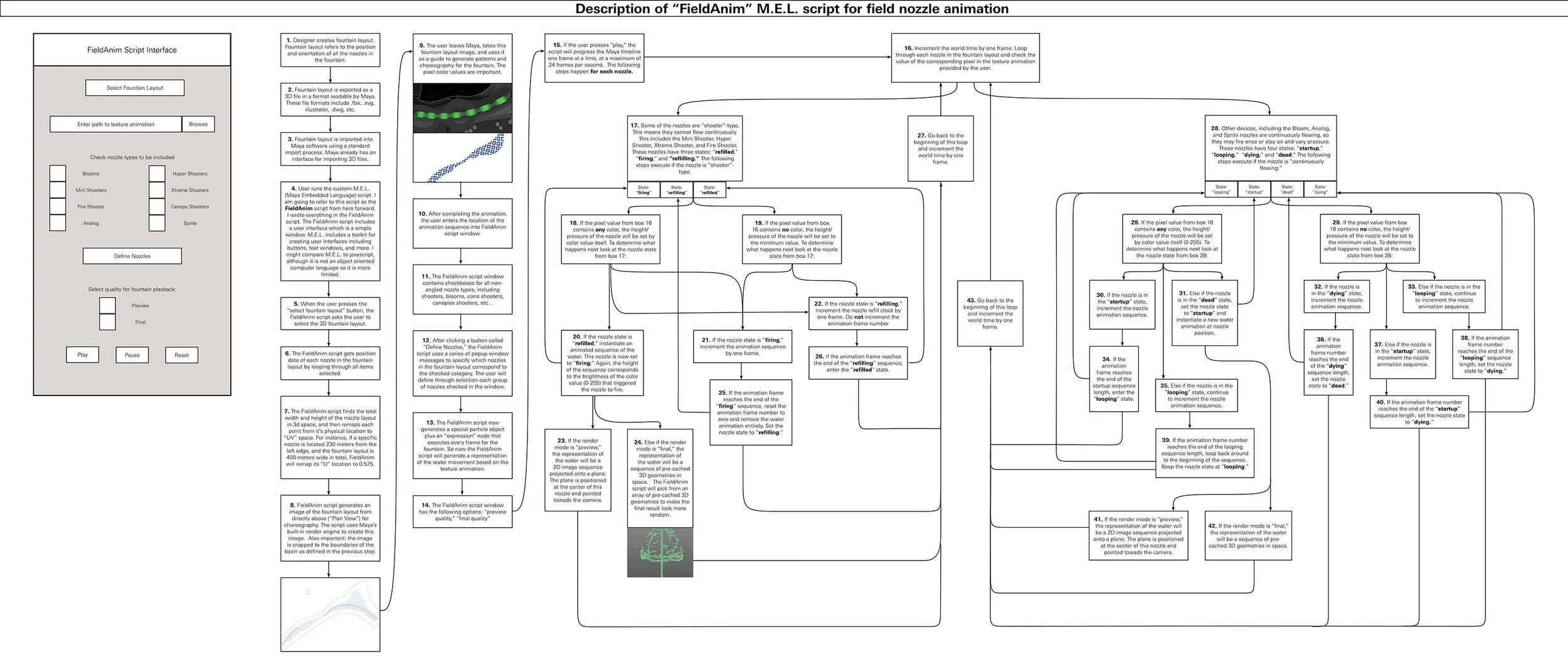

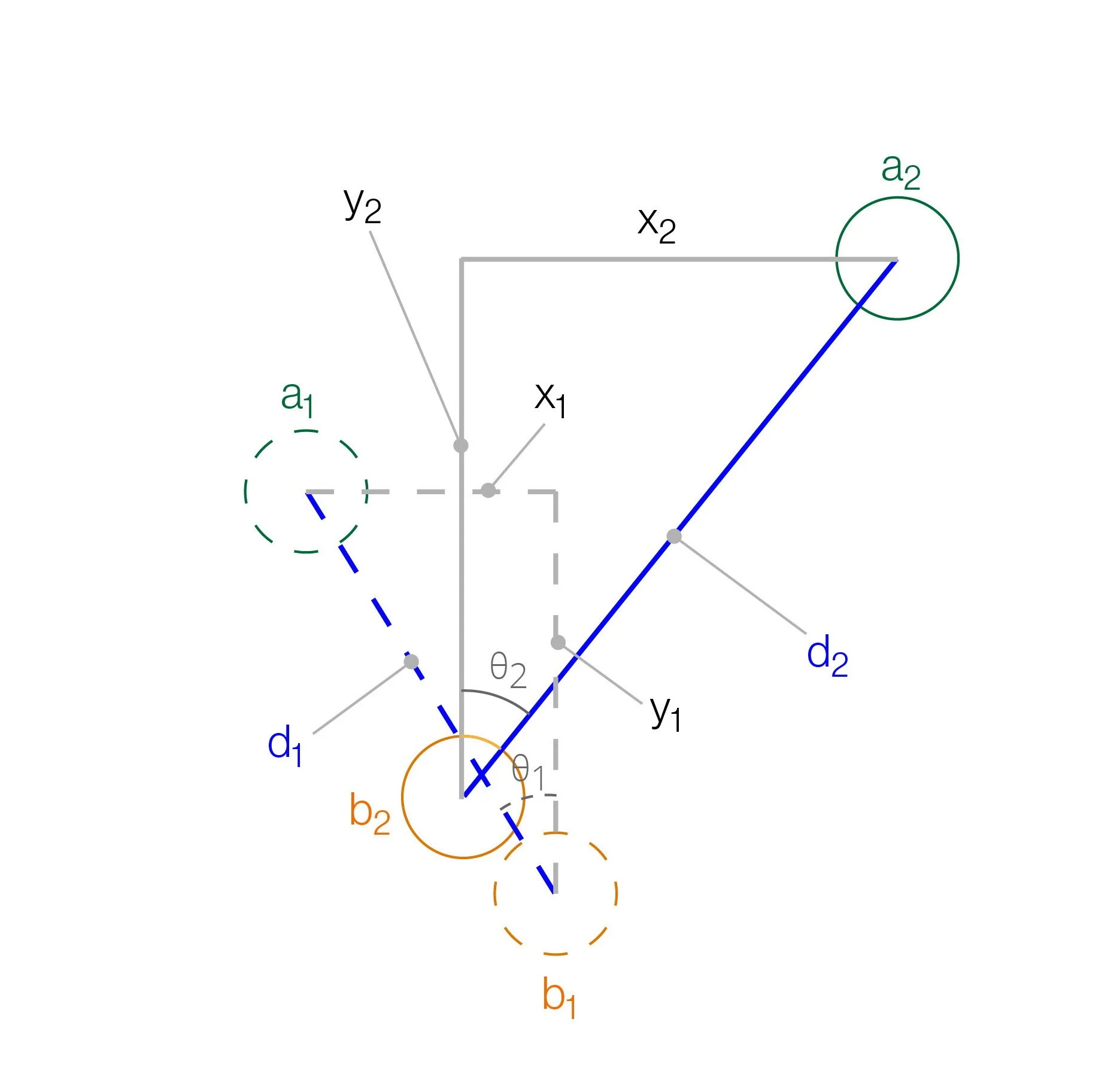

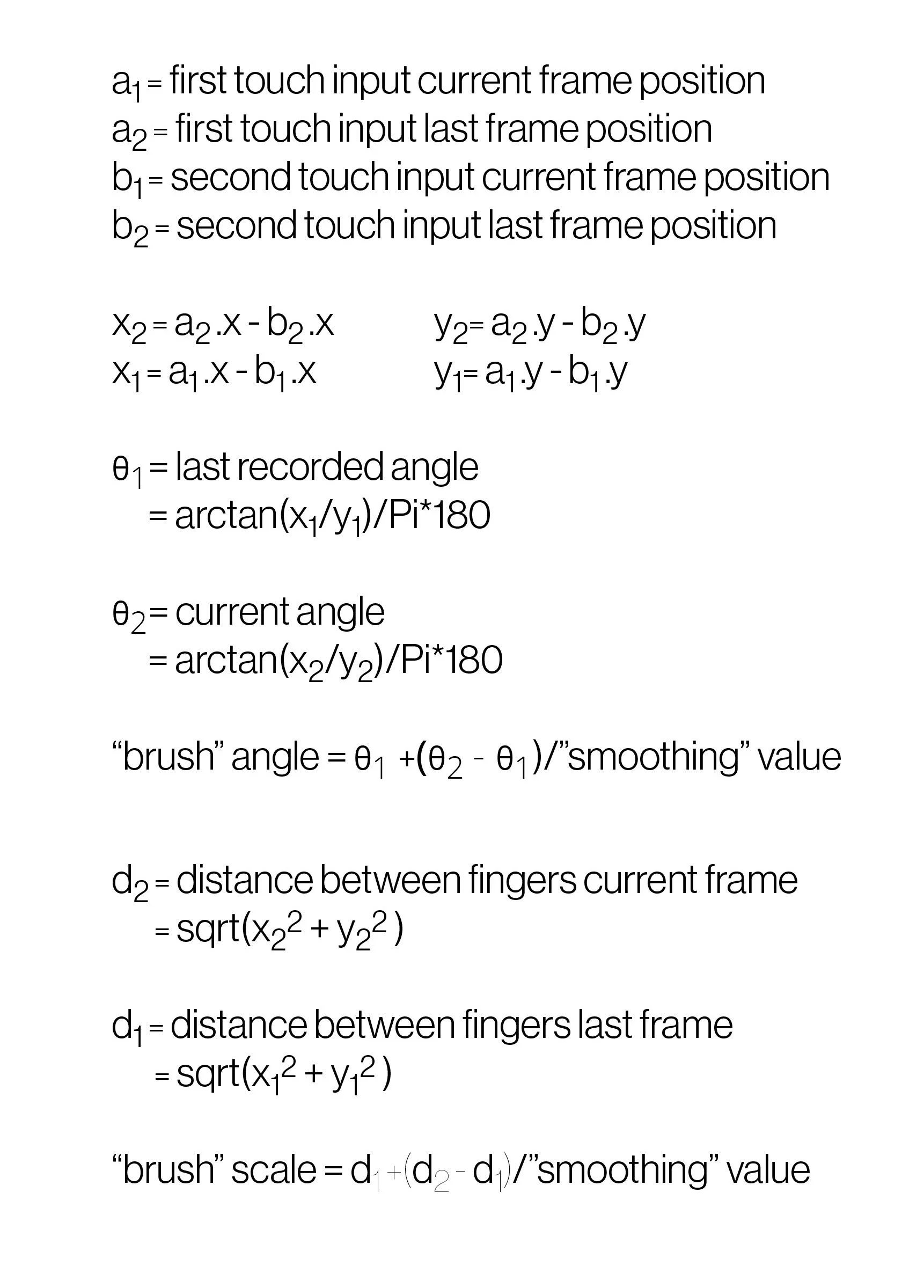

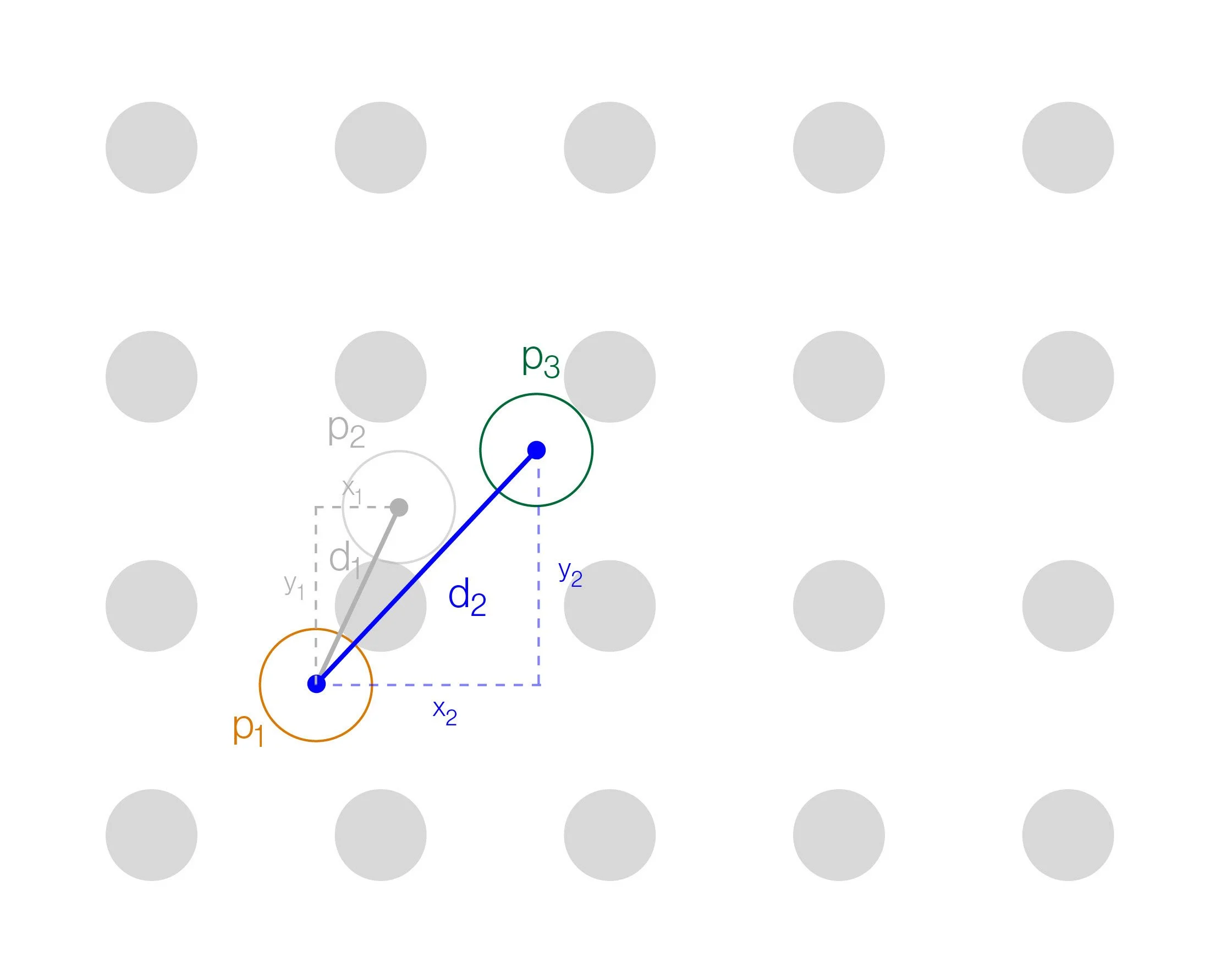

After the animation was complete, I documented my methodology in writing and in diagrams. I made the following diagram, which describes how the code functions, for submission with a patent application.

After developing this approach to choreography in about a week, I applied it to dozens of other projects. I created all of the water expressions you see below; the backgrounds were painted by the illustration team at WET. The examples include Saadiyat Island in Abu Dhabi, the Saudi Crown Prince water feature (Elan), and the Meydan One development in Dubai. The Crown Prince feature and Saadiyat were approved and are currently under construction. Each project took about a week from concept to finished animation.

Version 2 (total timeline several weeks)

Expanding and Applying the Tool to Multiple Projects

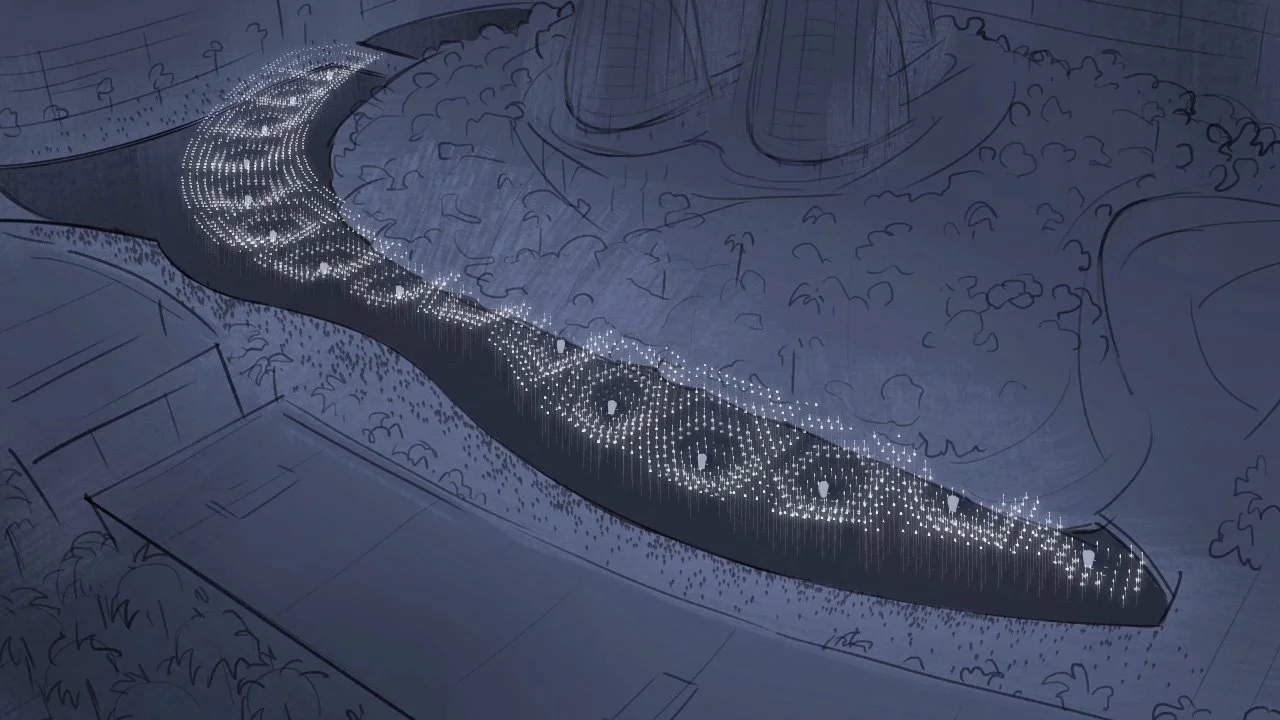

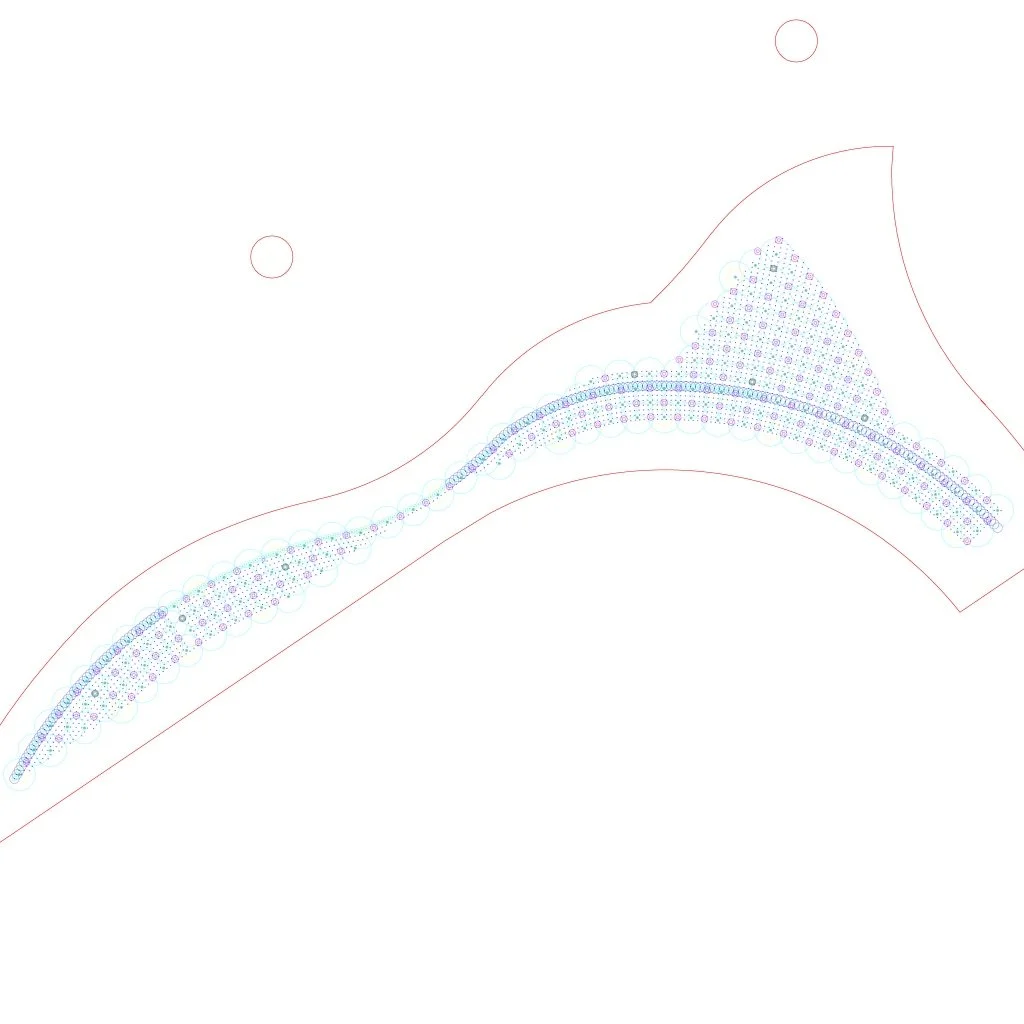

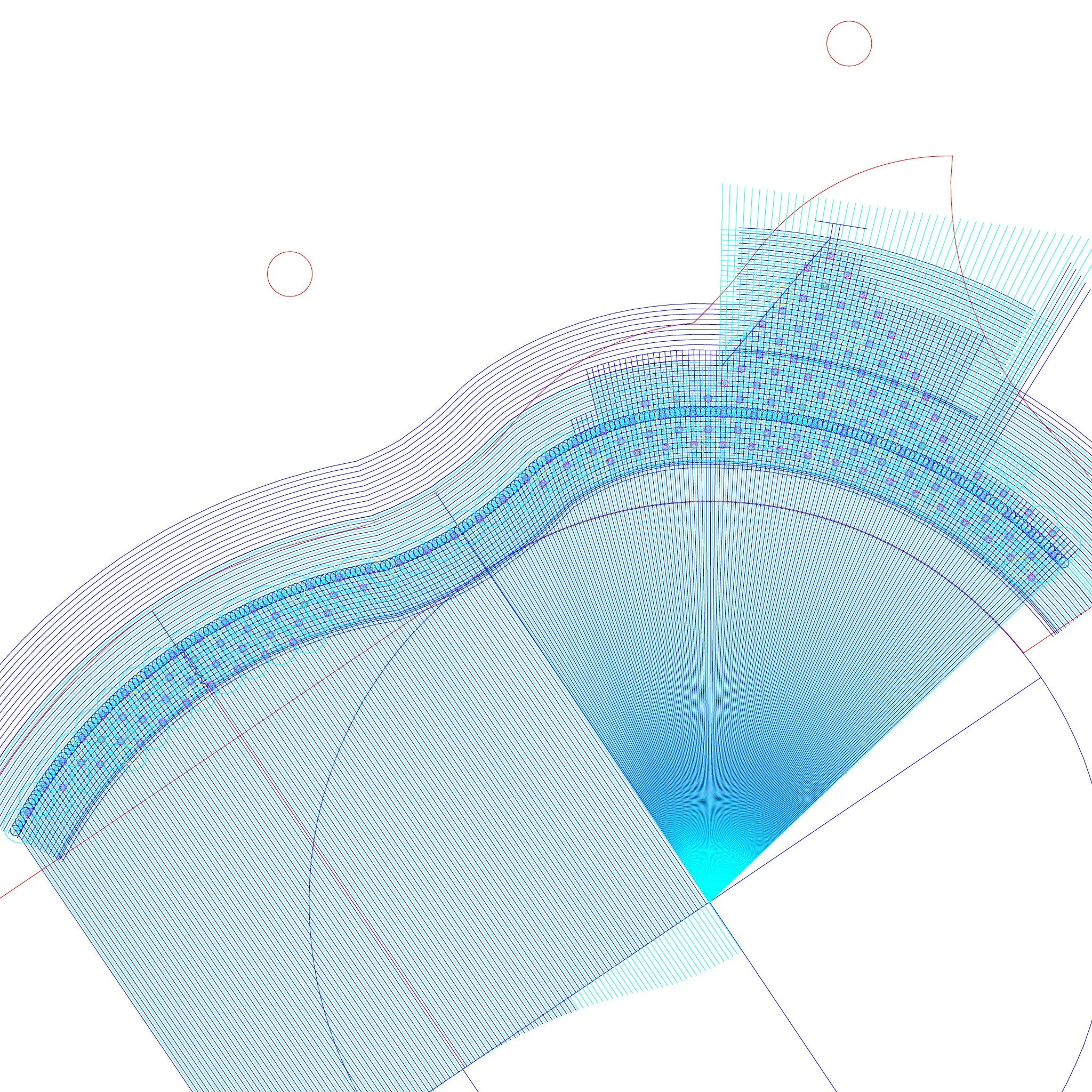

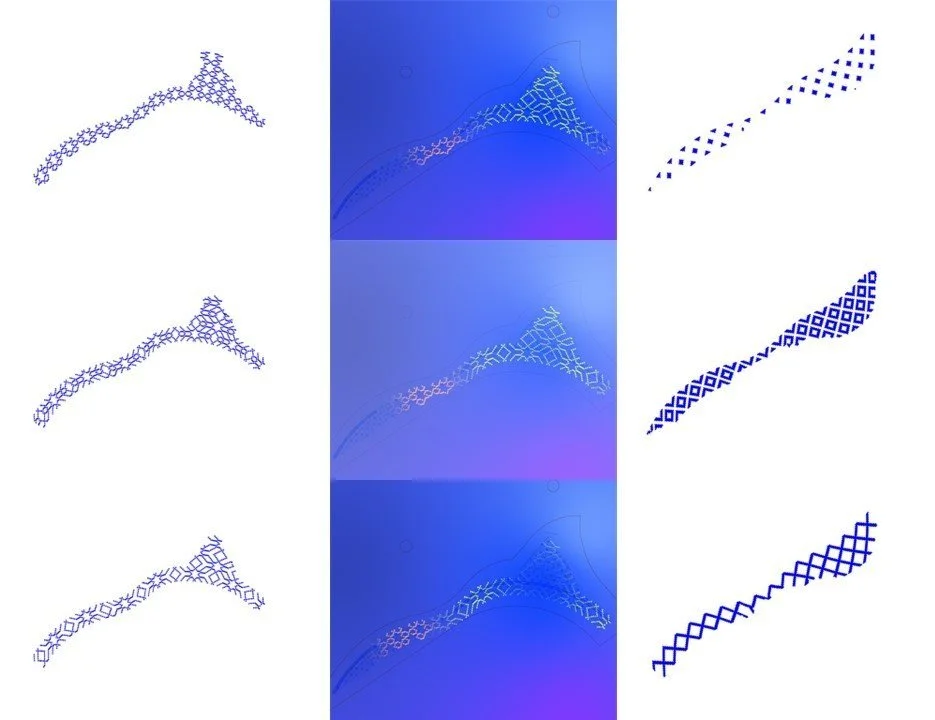

The nozzle layout on the left was provided by my coworker, Senior Designer Andrea Silva, for the Saadiyat Island development in Abu Dhabi. It is designed for a man-made body of water on site, and it specifies many kinds of water devices. Using Illustrator, I created the grid on the right to address each nozzle. Below you can see static and animated patterns I generated from that grid using a combination of Illustrator Live Paint and animated masks in After Effects. As before, I used these animations to drive complex 3D animations rendered for previs.
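The case study doesn't describe how the Illustrator grid assigns nozzles to cells. One simple way to get similar addressing is to bin each nozzle's position into a square grid laid over the layout's bounding box. The Python sketch below takes that approach; the cell size and the bounding-box origin are assumptions.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def address_nozzles(points: List[Point],
                    cell: float) -> Dict[Tuple[int, int], List[int]]:
    """Group nozzle indices by the (row, col) grid cell that contains them.

    The grid origin is the lower-left corner of the layout's bounding box.
    """
    min_x = min(p[0] for p in points)
    min_y = min(p[1] for p in points)
    cells: Dict[Tuple[int, int], List[int]] = {}
    for i, (x, y) in enumerate(points):
        key = (int((y - min_y) // cell), int((x - min_x) // cell))
        cells.setdefault(key, []).append(i)
    return cells
```

Each `(row, col)` key can then index a pixel in a pattern frame, so every nozzle in a cell reads the same value, regardless of device type or irregular placement.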

Below are clips from various projects. I created all the patterns, water choreography, and animation you see in these clips using mostly Maya and After Effects. The backgrounds were painted by illustrators Erin Kim, Jennie Lee, and Yong Park at WET.

Version 3 (1-week timeline)

Rewriting the Tool for AR/Unity

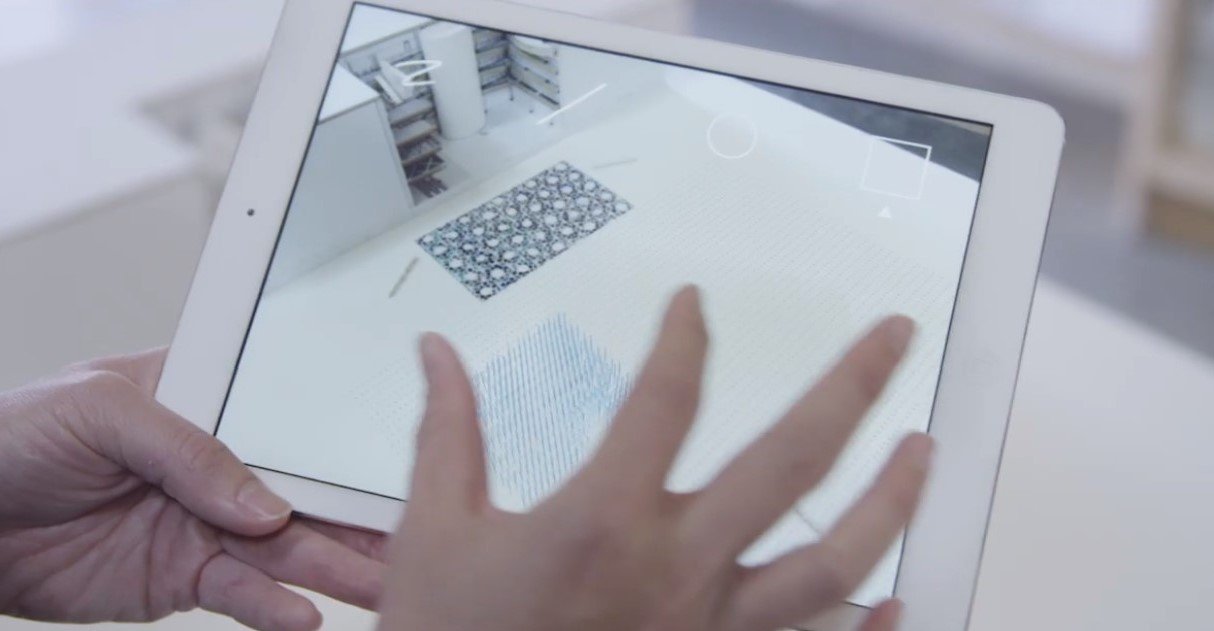

I decided to rewrite the tool in Unity 5 using JavaScript, for two reasons. First, I wanted to use augmented reality to integrate the water choreography into physical models for presentations. WET delivers a physical model for each concept presentation. I wanted to bring my animations into those presentations in real time, using an iPad as a “magic window” that reveals the choreography in relation to its physical surroundings. Second, I wanted clients to be able to use the iPad screen as an interface to control and manipulate the choreography, so they could get a sense of the creative process themselves. I wanted to invite them into the process and have fun with it!

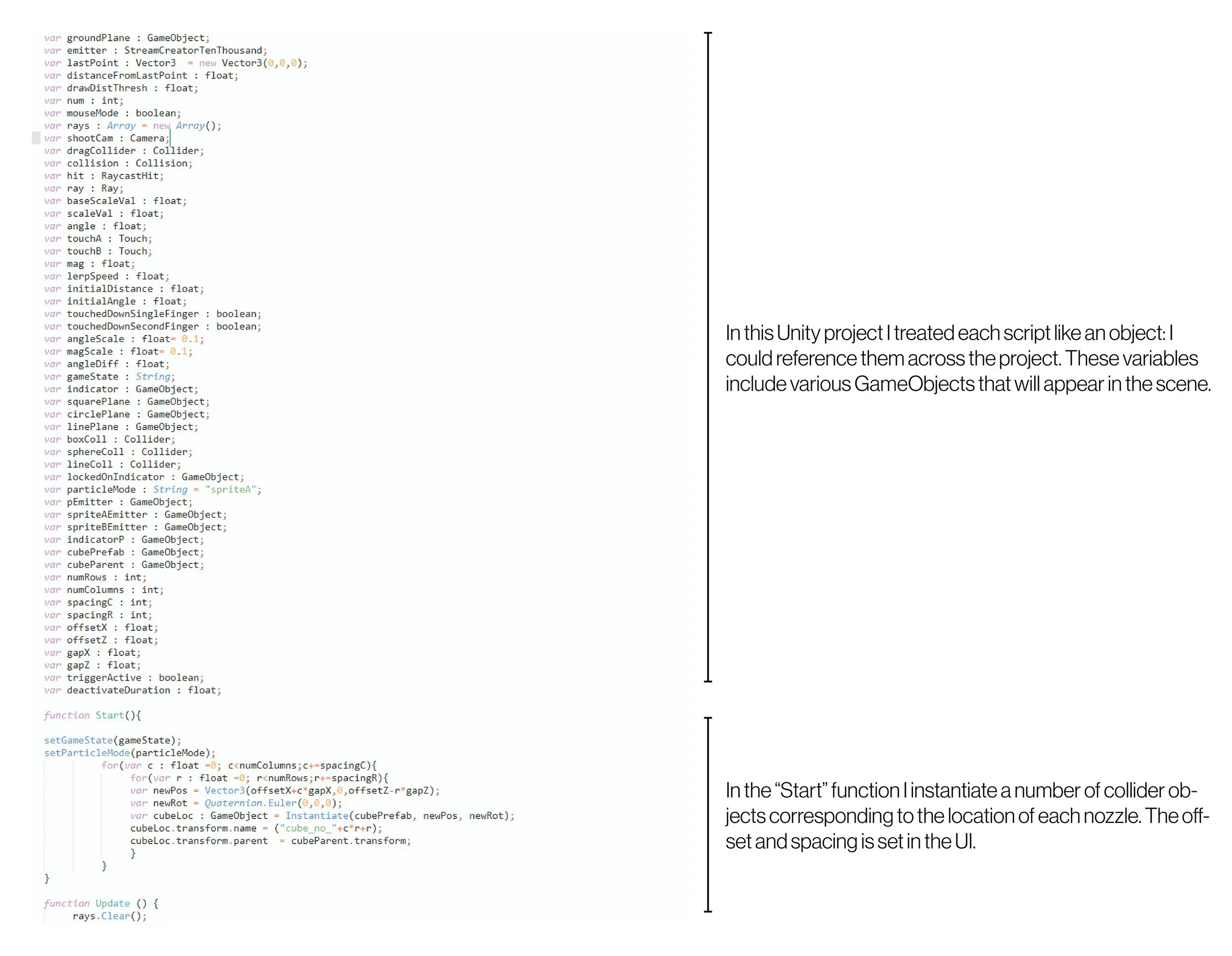

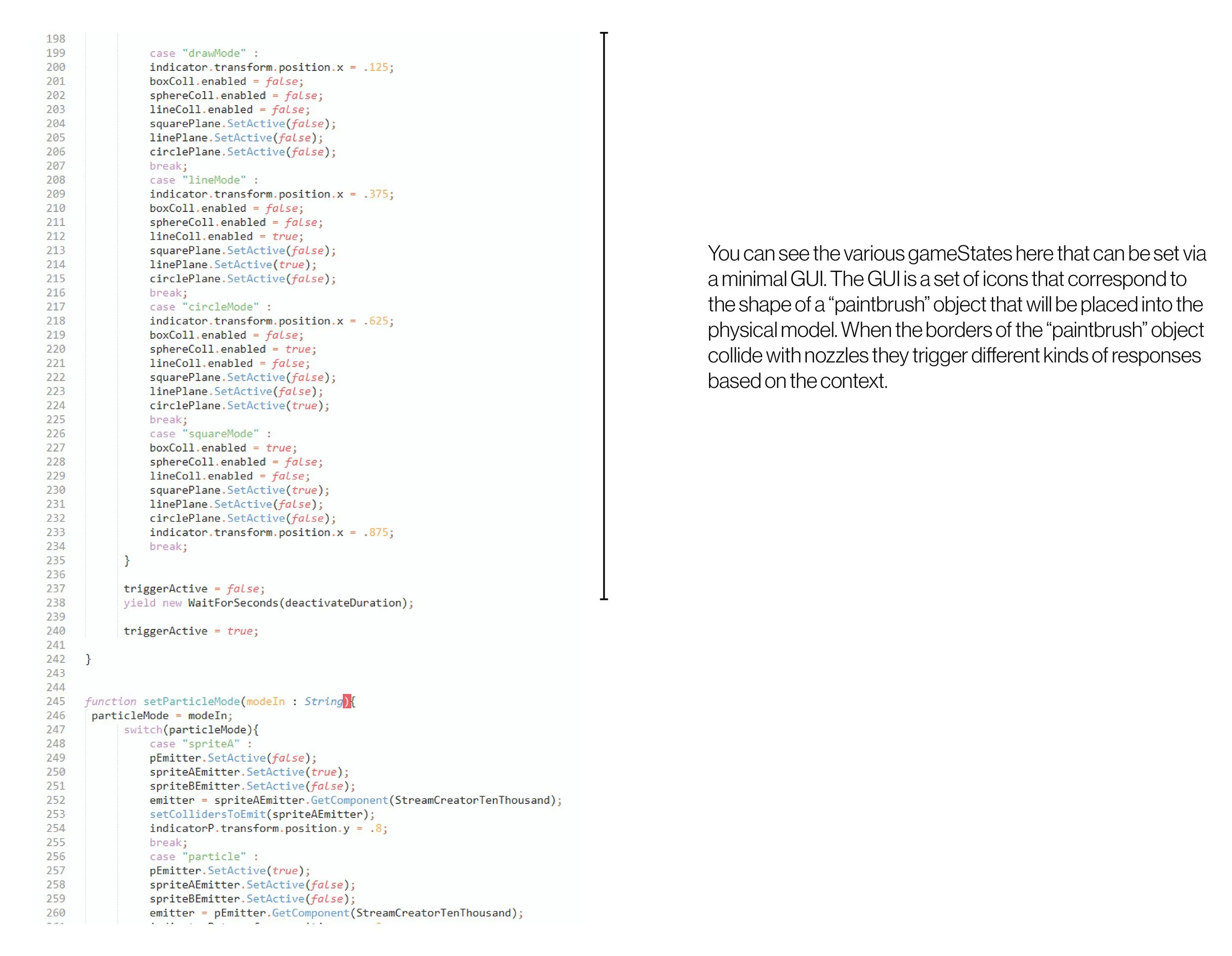

The following script is one of many JavaScript files that make up the Unity project. I used Qualcomm’s Vuforia library for in-camera texture tracking, and I treated each script like an object.

Below you can see me describing the concept behind this application. I created dozens of custom apps for concept presentations that accompanied physical models. These physical models would form the centerpiece of the presentation, and the iPad would help bring it to life.

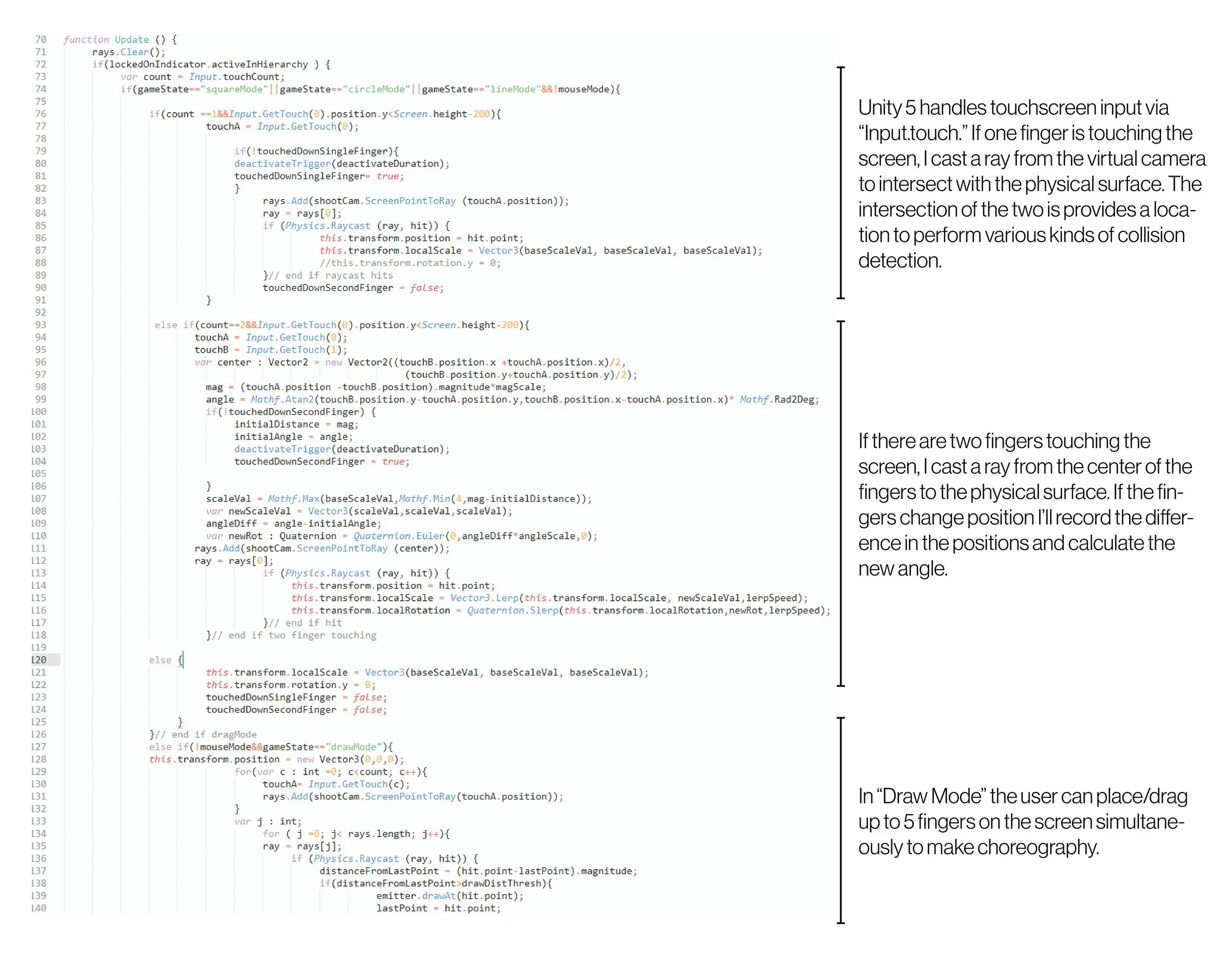

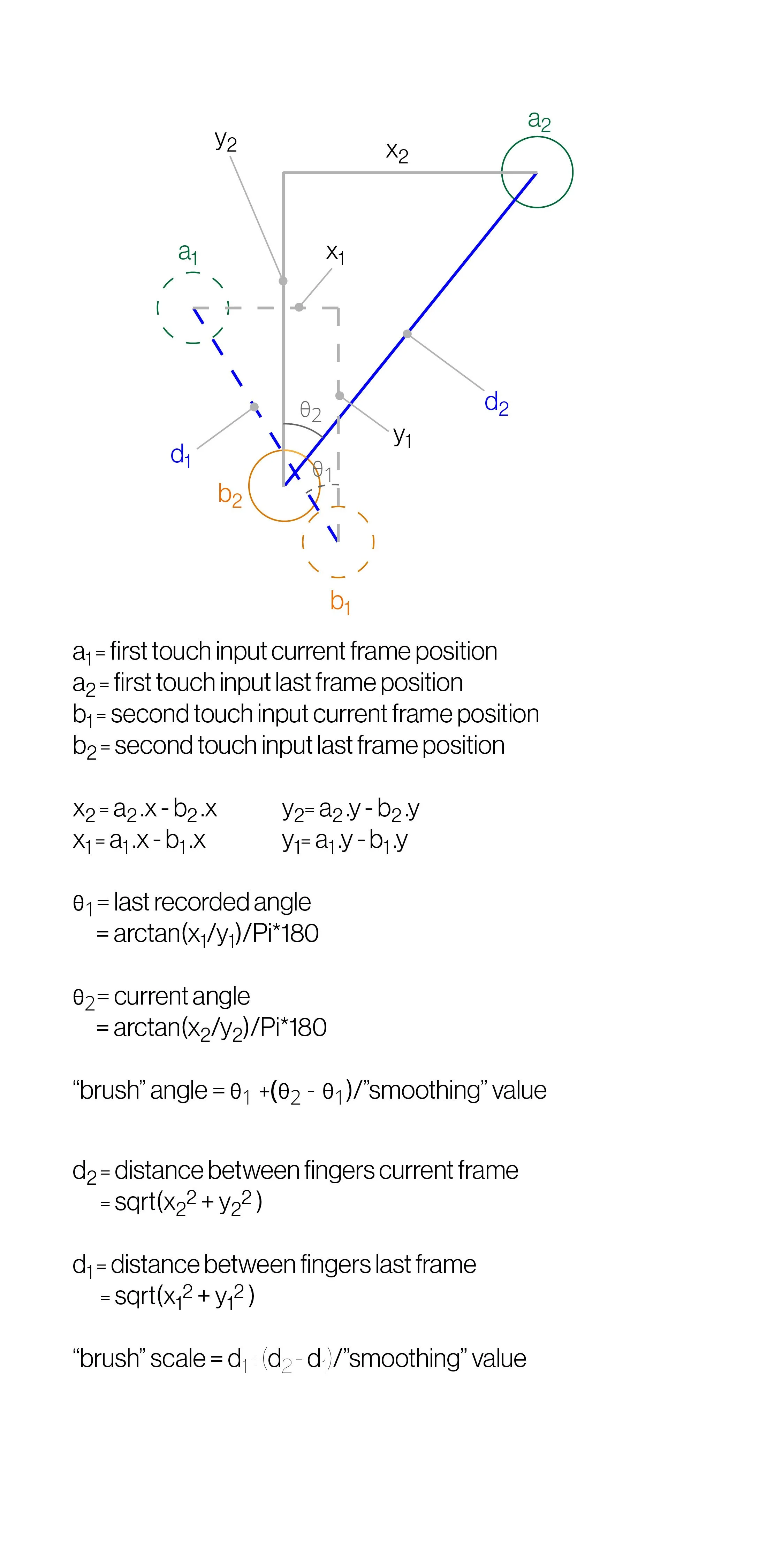

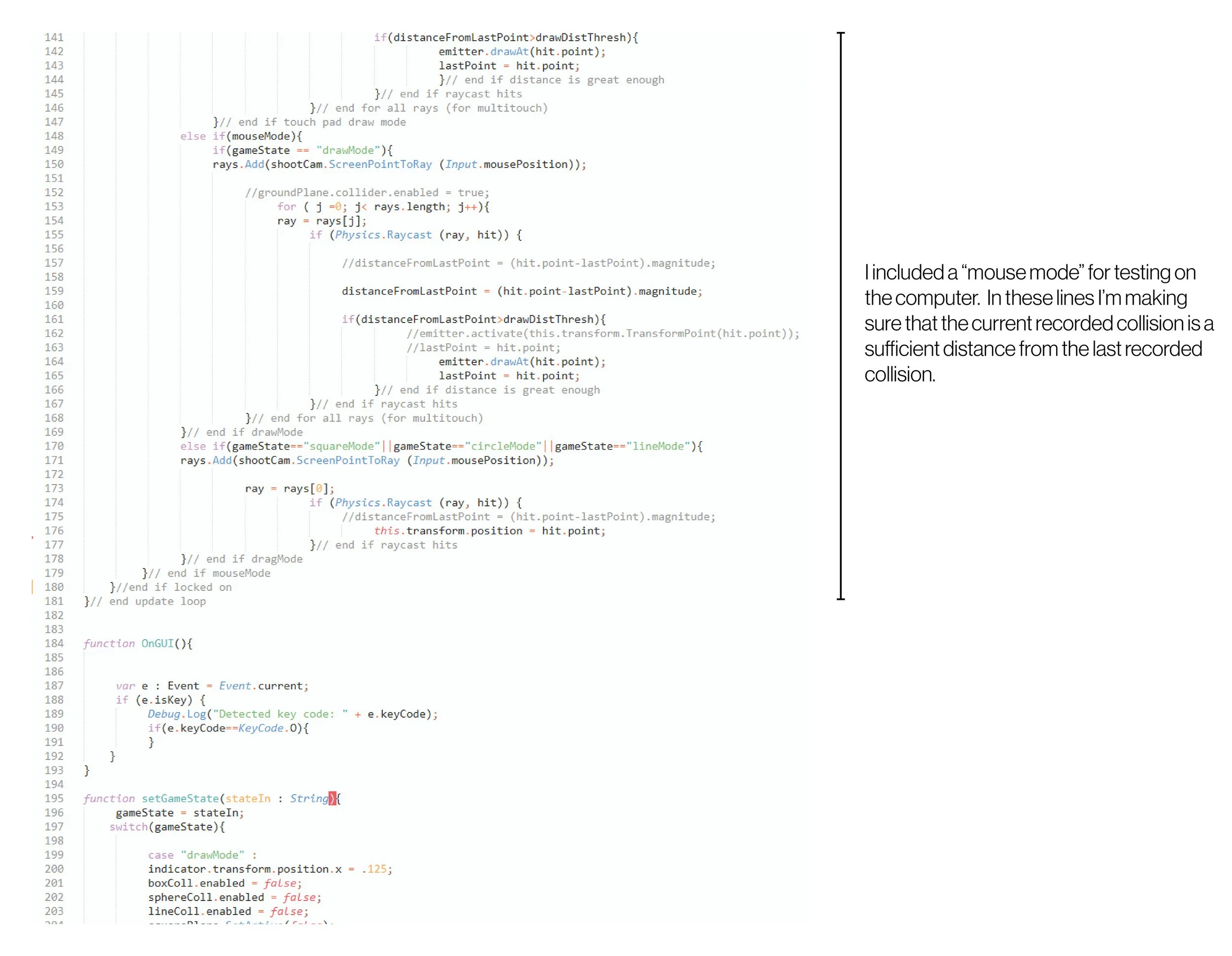

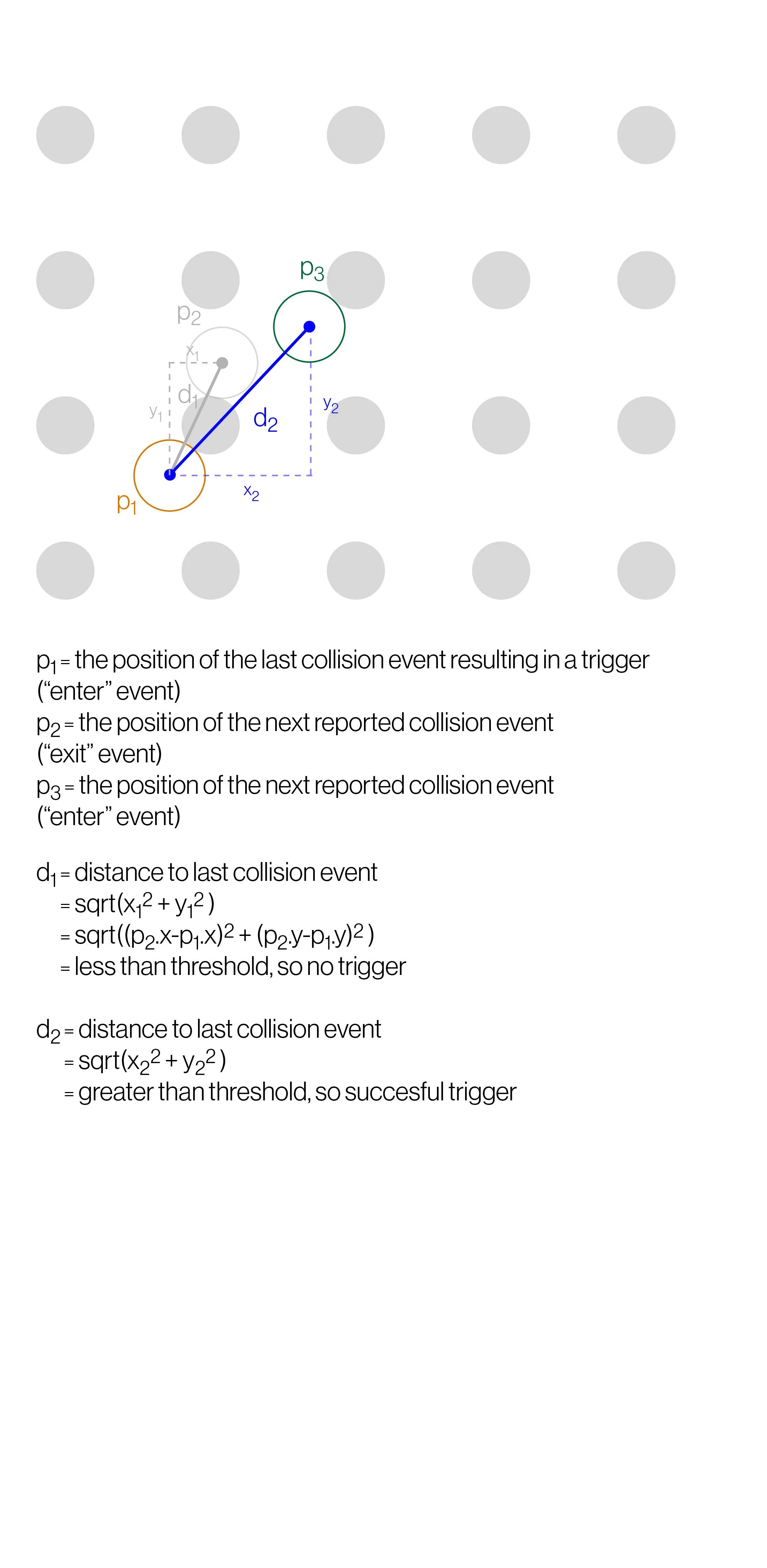

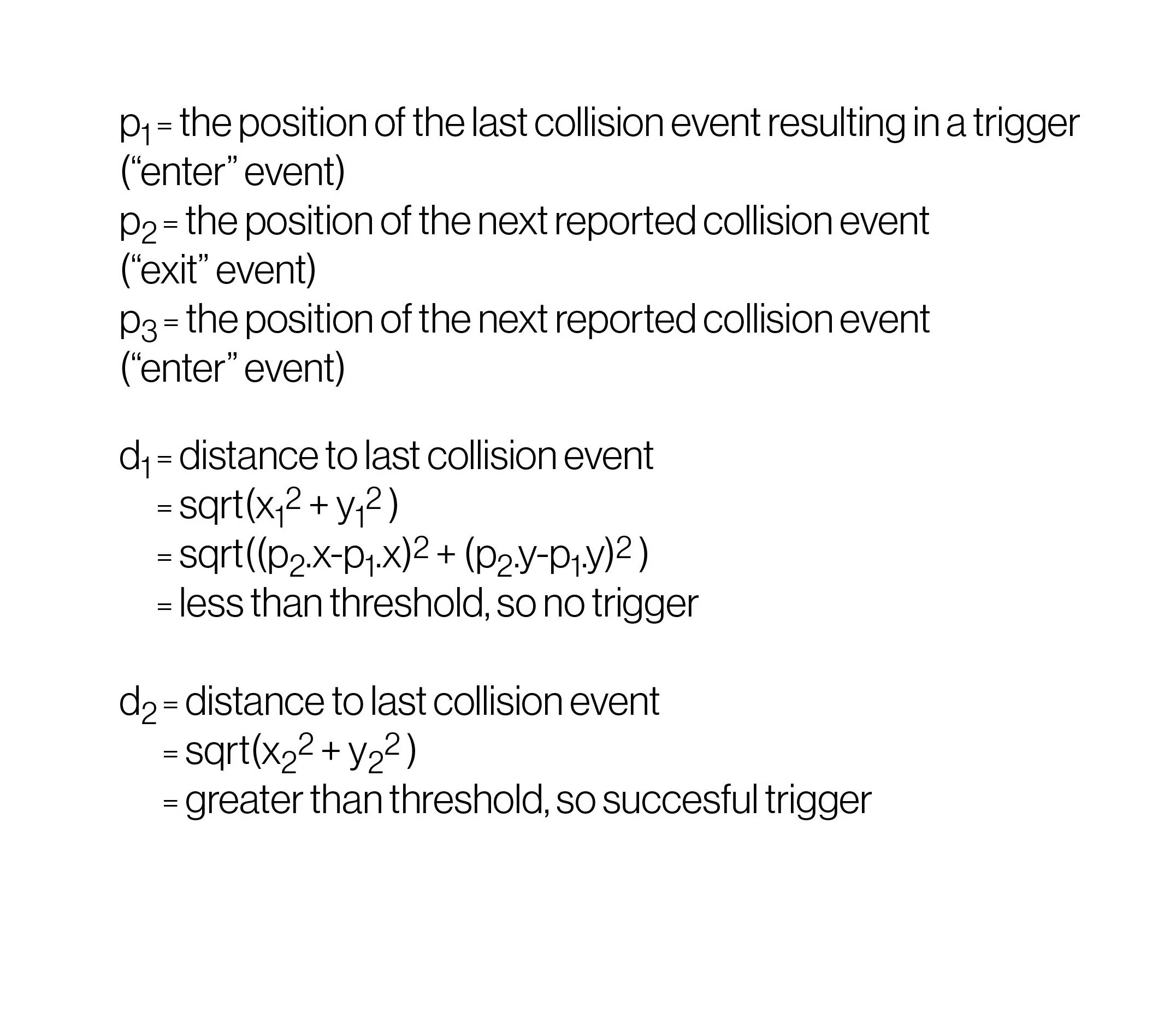

The following section handles touchscreen input. It casts a ray from the virtual camera through the touch location to find where the ray intersects the surface.
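In Unity, `Camera.ScreenPointToRay` and raycasting functions such as `Physics.Raycast` handle this step. The Python sketch below shows the underlying math for a pinhole camera with no lens distortion. It assumes orthonormal camera axes, a camera above the surface, and a surface that is the flat plane y = 0. None of these details come from the original script.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def add(a: Vec, b: Vec, s: float = 1.0) -> Vec:
    """Return a + s * b."""
    return (a[0] + s * b[0], a[1] + s * b[1], a[2] + s * b[2])


def touch_to_ground(cam_pos: Vec, forward: Vec, right: Vec, up: Vec,
                    fov_y_deg: float, width: int, height: int,
                    sx: float, sy: float) -> Optional[Vec]:
    """Cast a ray through screen pixel (sx, sy) and intersect the plane y = 0.

    forward/right/up are assumed orthonormal; (0, 0) is the top-left of the
    screen. Returns None if the ray never reaches the plane.
    """
    tan_half = math.tan(math.radians(fov_y_deg) / 2.0)
    aspect = width / height
    # Screen position mapped to offsets on an image plane one unit ahead.
    nx = (2.0 * sx / width - 1.0) * tan_half * aspect
    ny = (1.0 - 2.0 * sy / height) * tan_half
    direction = add(add(forward, right, nx), up, ny)
    if direction[1] >= 0.0:  # level or upward ray cannot hit a plane below
        return None
    t = -cam_pos[1] / direction[1]
    return add(cam_pos, direction, t)
```

The returned hit point can then be converted to a UV coordinate on the choreography texture.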

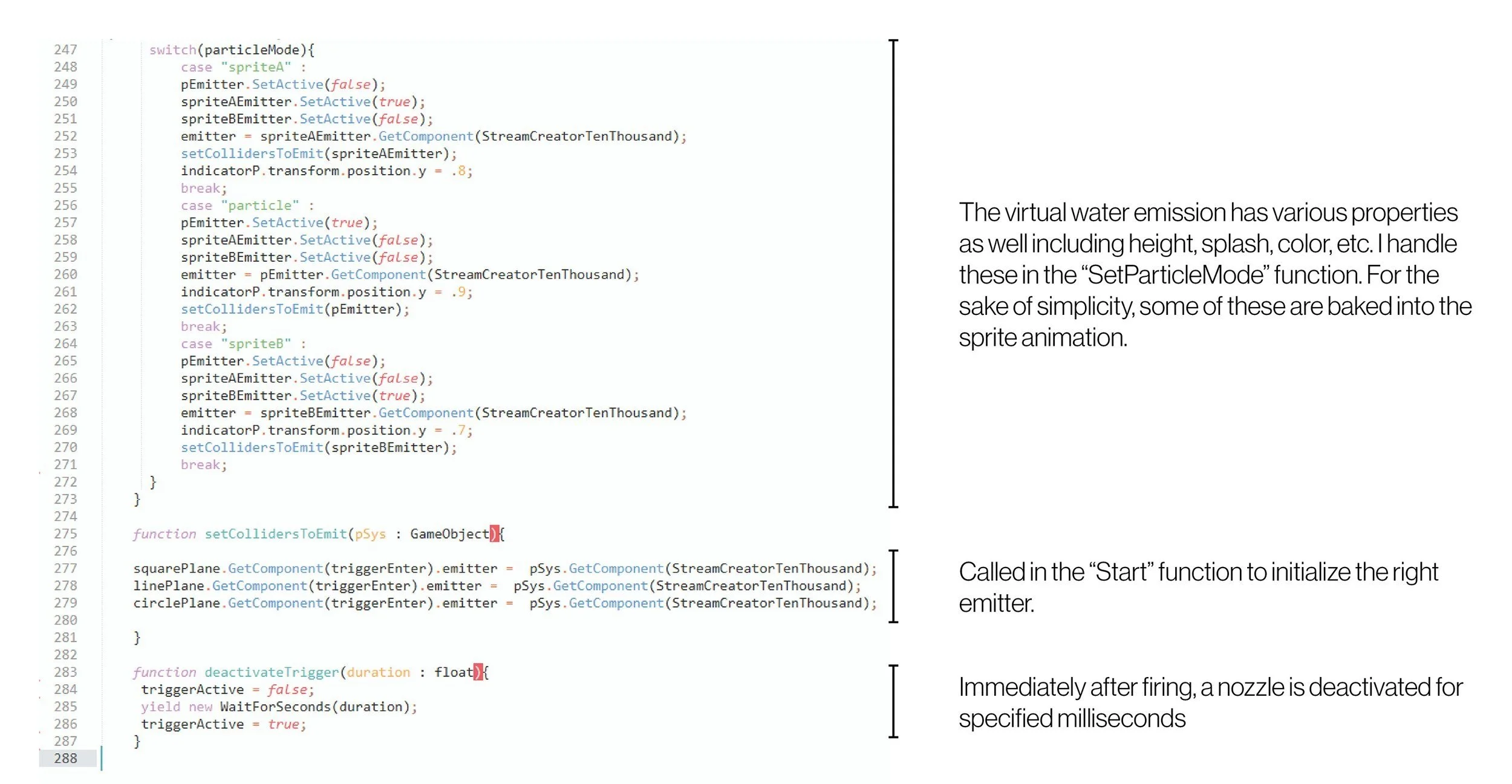

I made a very simple interface with several “brushes” to give the user (in this case a client) a sense of the thinking behind the choreography.
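The brushes themselves aren't documented here, so the following is a hypothetical Python sketch of one: a soft round brush that adds to a grayscale choreography texture around a touch point, with linear falloff. A negative strength acts as an eraser. The radius units, falloff shape, and clamping are all assumptions.

```python
from typing import List

Texture = List[List[float]]


def paint_brush(tex: Texture, u: float, v: float, radius: float,
                strength: float = 1.0) -> None:
    """Paint a soft circular dab into tex (in place) centered at (u, v).

    radius is in UV units; the dab fades linearly from center to rim.
    Values are clamped to [0, 1]. Row 0 corresponds to v = 0 (assumption).
    """
    h, w = len(tex), len(tex[0])
    for y in range(h):
        for x in range(w):
            # UV of this pixel's center.
            pu, pv = (x + 0.5) / w, (y + 0.5) / h
            d = ((pu - u) ** 2 + (pv - v) ** 2) ** 0.5
            if d < radius:
                falloff = 1.0 - d / radius
                tex[y][x] = min(1.0, max(0.0, tex[y][x] + strength * falloff))
```

Because the brush writes into the same kind of texture the choreography already samples, client strokes would flow through the existing pipeline unchanged.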

Version 4 (2-day timeline)

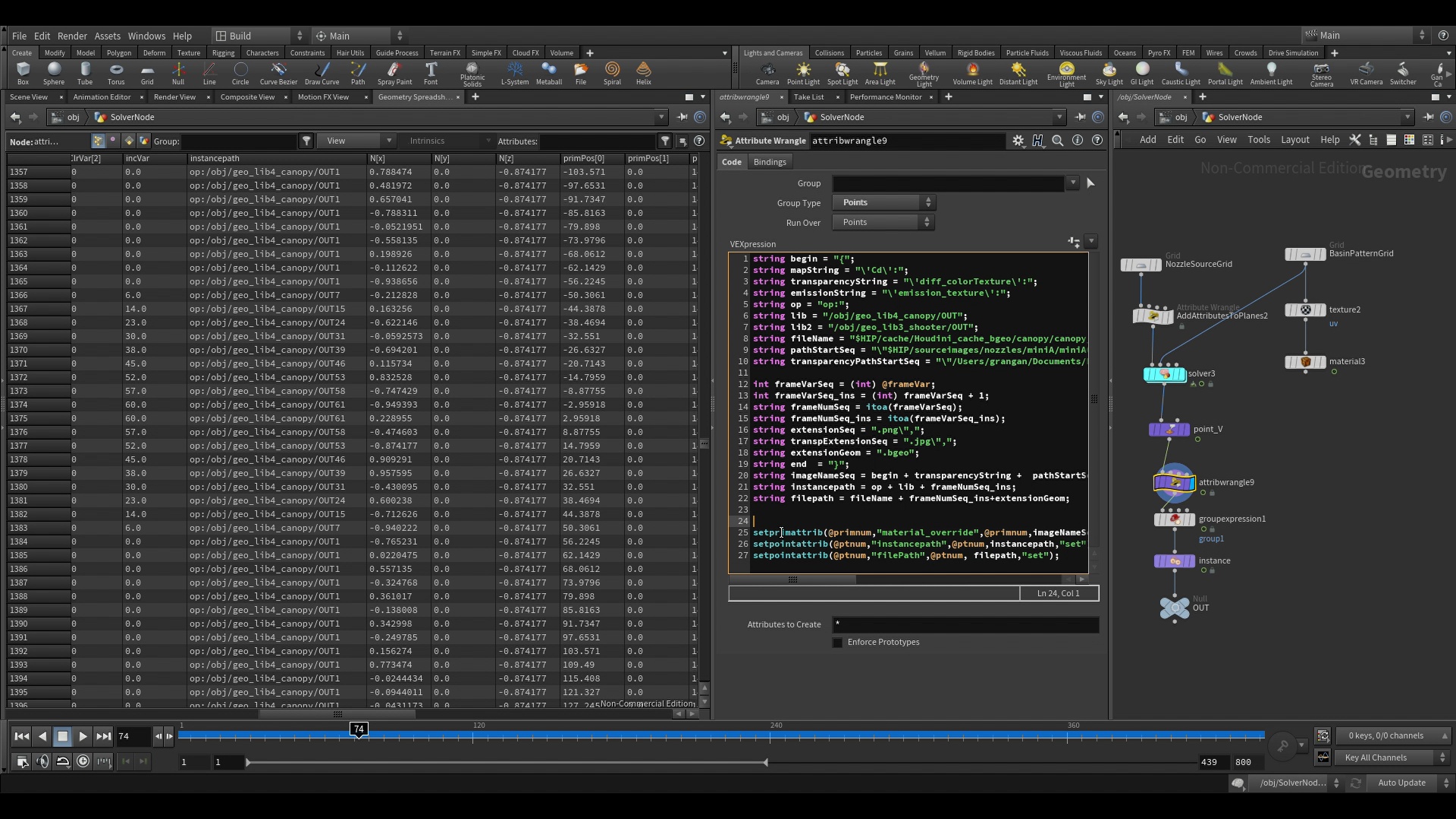

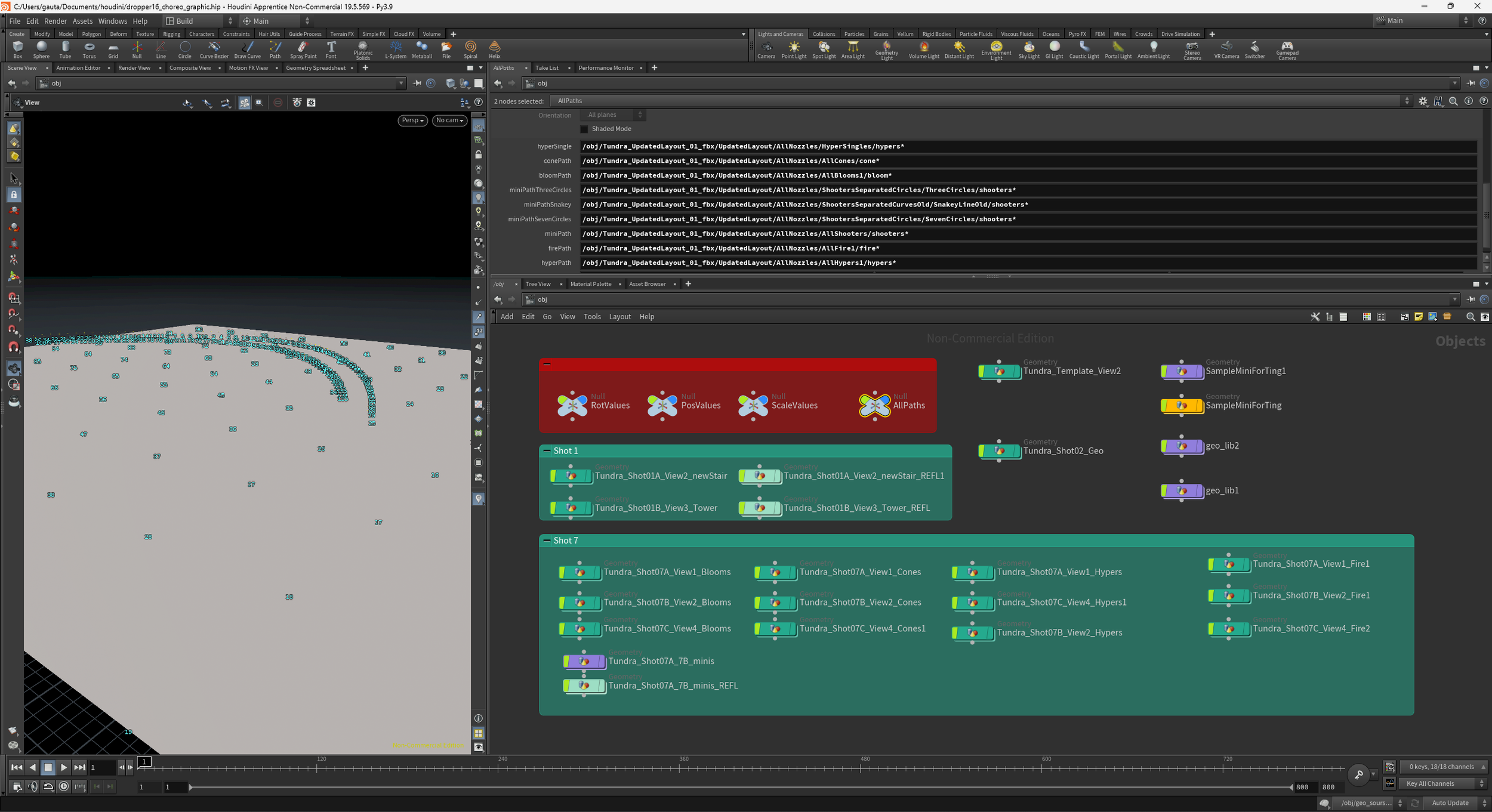

Rewriting the system for Houdini and VEX

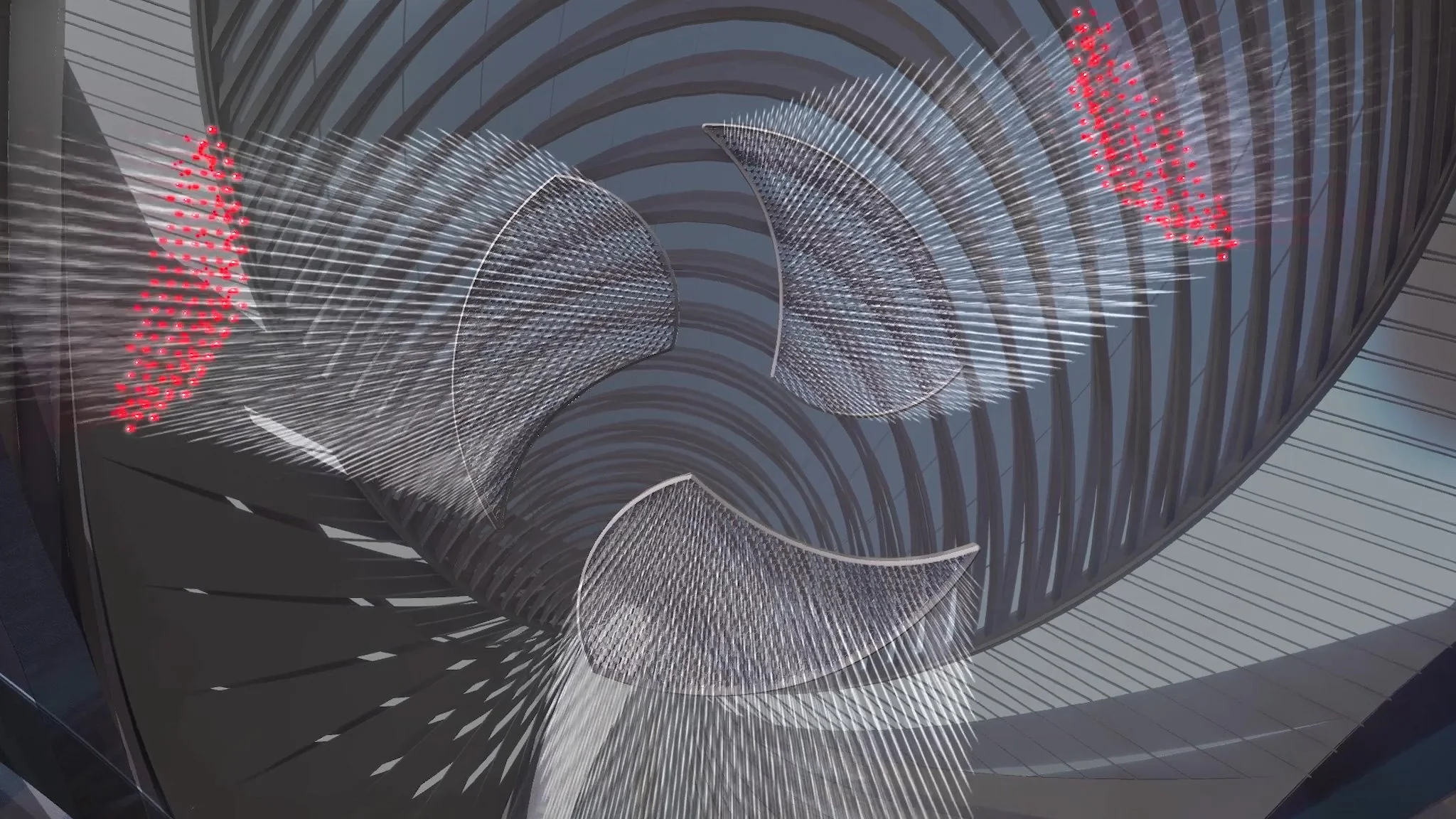

Finally, I rewrote the choreography system for Houdini using the VEX scripting language and a Solver node network. I did this because I wanted to render thousands of instances of high-poly geometry and achieve higher-quality renders.

I love Houdini’s node-based approach to organization, but it is not easy to store and increment variables from frame to frame. If you want data from the previous frame to influence the next frame, you need a Solver node. With a Solver node, I was able to recreate the same choreography system using VEX scripting and Point Wrangle nodes.
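The VEX code isn't reproduced in this write-up, but the pattern a solver enables can be sketched in Python: each frame starts from the previous frame's values instead of recomputing from scratch. In this assumed example, each point's height eases toward the value sampled from the texture at a hypothetical rate. A stateless network could not do this, because it has no access to the last frame's result.

```python
from typing import List


def solver_step(prev_heights: List[float], targets: List[float],
                rate: float = 0.2) -> List[float]:
    """One solver iteration: move each point's height toward its target.

    Because each frame starts from the previous frame's output, heights rise
    and fall smoothly instead of jumping whenever the texture changes.
    """
    return [h + rate * (t - h) for h, t in zip(prev_heights, targets)]


def simulate(targets_per_frame: List[List[float]],
             rate: float = 0.2) -> List[List[float]]:
    """Run the solver over a sequence of per-frame targets, starting at zero."""
    heights = [0.0] * len(targets_per_frame[0])
    history = []
    for targets in targets_per_frame:
        heights = solver_step(heights, targets, rate)
        history.append(heights)
    return history
```

Inside a Solver SOP, a Point Wrangle would express the same update as a one-line assignment on a point attribute. The geometry it receives already carries the previous frame's values.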

Below you can see a few frames from an animation I made for a proposal video. The background environment was modeled by my coworker Robert Pfleider and rendered in Unreal. I composited the Houdini renders on top of 3D flythroughs from Unreal.